Data annotation, the process of labeling raw data, makes accurate AI models possible. It underpins model reliability and scalability, and with them the return on investment of machine learning projects.

Artificial Intelligence (AI) and Machine Learning (ML) are transforming business sectors, and they depend on precise data annotation to do so. Systems ranging from healthcare diagnostics to autonomous driving need accurately annotated data to function properly.

Contents

- What is Data Annotation?

- Popular Types of Data Annotation Techniques

- Why Data Annotation is So Crucial for Machine Learning & AI

- Data Annotation Best Practices

- Challenges of Data Annotation in AI/ML Projects

- Outsourcing Data Annotation: A Strategic Advantage

- Key Industries Benefiting from Data Annotation

- The Future of Data Annotation in AI/ML

- Conclusion

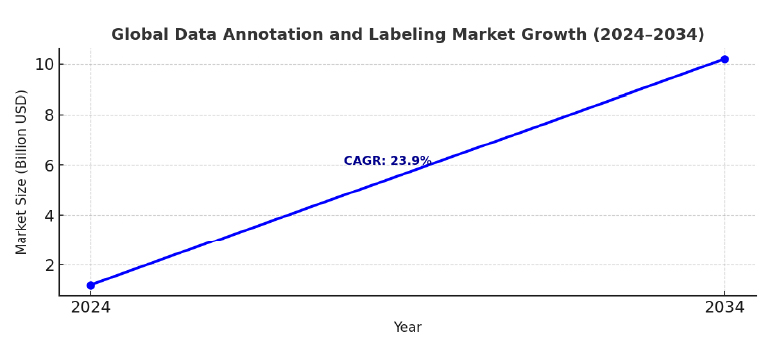

Even the most sophisticated algorithms produce unreliable results without properly structured and labeled data. The data annotation and labeling market is projected to grow from $1.2 billion in 2024 to $10.2 billion by 2034, a 23.9% Compound Annual Growth Rate (CAGR). That growth reflects how crucial data annotation has become to AI success.
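
The growth figures above can be sanity-checked with the standard CAGR formula. The short sketch below is only an arithmetic check of the quoted numbers; the function name `cagr` is our own and not part of any cited report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.239 means 23.9%)."""
    return (end_value / start_value) ** (1 / years) - 1

# Figures quoted in the article: $1.2 billion (2024) to $10.2 billion (2034).
rate = cagr(1.2, 10.2, 2034 - 2024)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 23.9%
```

The implied rate matches the quoted 23.9%, so the start value, end value, and growth rate are consistent with one another.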

For AI/ML companies, high-quality annotation is the foundation for scalable, profitable innovation.

Looking to scale your AI projects with precision? Explore professional data annotation services.

What is Data Annotation?

Data annotation is the process of adding labels to data to give it context and meaning. Machine learning models learn from annotated data during training, and it is what enables them to make accurate predictions or decisions. Model accuracy and dependability depend directly on annotation quality, which makes annotation a foundational step in developing and deploying AI systems.

In practice, annotation means labeling raw data, including text, images, audio, video, and sensor inputs, so that machines can interpret it.

It is similar to teaching a child about apples by showing them an apple while saying the word “apple.” With repeated exposure, the child learns to identify apples in any setting. Annotation does the same for machines.

Types of data requiring annotation:

- Text: Tagging entities, intent, and sentiment.

- Images: Labeling objects, regions, or individual pixels.

- Videos: Tracking movement frame by frame.

- Audio: Identifying speakers, transcribing spoken words, and detecting emotional signals.

- LiDAR/Sensor data: Classifying 3D environments.

According to Forbes, data preparation and labeling account for more than 80% of the time spent on AI projects. Annotation is the foundation every AI system is built on.

Popular Types of Data Annotation Techniques

Different business domains call for different data annotation techniques. The most common ones are described below.

Image Annotation

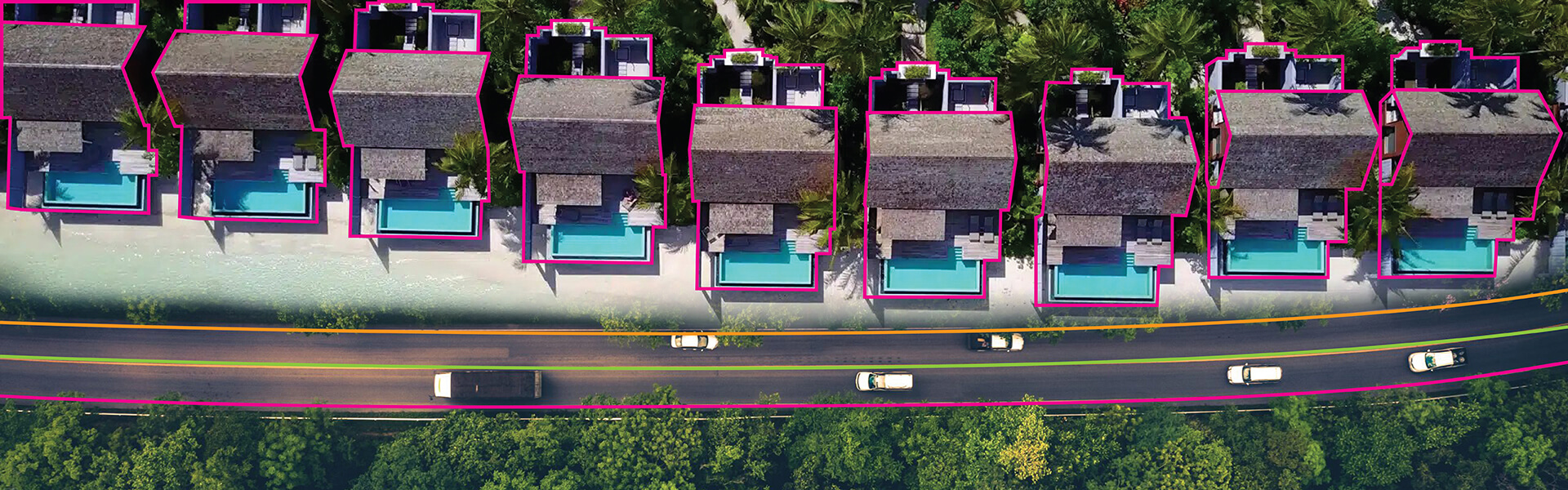

Image annotation teaches AI models to detect and classify objects in static visual data.

- Bounding Boxes: Fast and efficient; widely used for detecting objects such as cars or animals.

- Polygons: More precise than rectangles for irregular shapes such as roads, rivers, and tumors in medical images.

- Semantic Segmentation: Labels every pixel with a class, separating background from foreground objects even where objects overlap.

- Instance Segmentation: Distinguishes separate objects that belong to the same class (e.g., multiple people in one image).

- Keypoint Annotation: Marks facial landmarks and body joints to enable pose estimation and gesture recognition.
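
To make the difference between bounding boxes and polygons concrete, here is a minimal sketch. The annotation record, its field names, and the coordinates are hypothetical and do not follow any particular tool's format. It compares the area covered by a polygon with the area of the tightest box around the same shape.

```python
from typing import List, Tuple

Point = Tuple[float, float]

def polygon_area(points: List[Point]) -> float:
    """Area of a simple polygon via the shoelace formula."""
    total = 0.0
    for i, (x1, y1) in enumerate(points):
        x2, y2 = points[(i + 1) % len(points)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2

def bounding_box(points: List[Point]) -> Tuple[float, float, float, float]:
    """Tightest axis-aligned box (x_min, y_min, x_max, y_max) around the points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)

# Hypothetical polygon annotation for a triangular region, in pixel coordinates.
annotation = {"label": "tumor", "polygon": [(10, 10), (50, 10), (10, 40)]}

x_min, y_min, x_max, y_max = bounding_box(annotation["polygon"])
box_area = (x_max - x_min) * (y_max - y_min)
poly_area = polygon_area(annotation["polygon"])
print(f"box covers {box_area} px, polygon covers {poly_area} px")
```

In this example the box covers twice the area of the polygon, so half of the boxed pixels do not belong to the object. That extra background is the precision cost of using a rectangle on an irregular shape.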

Applications of image annotation include tumor detection in medical diagnostics, quality control in manufacturing, eCommerce product tagging, and biometric authentication.

By partnering with HabileData for data annotation, a client enhanced their machine learning models for accurate food wastage analysis. The detailed annotations helped improve business performance, allowing the client to assess food waste more effectively and build credibility in the market.

Video Annotation

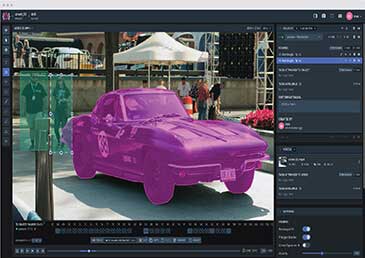

Video annotation must handle both motion and time-dependent information, which sets it apart from image annotation.

- Frame-by-Frame Labeling: Objects are labeled in every frame of a video sequence, which lets annotators capture how they change between successive frames.

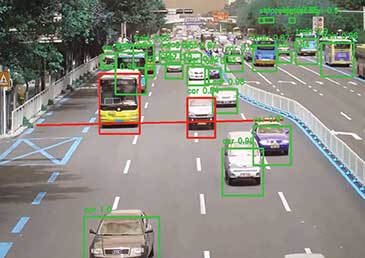

- Object Tracking: Follows a moving object, such as a pedestrian, across multiple frames.

- Event Annotation: Labels specific events in the video, such as car accidents, handshakes, and falls.

- Temporal Segmentation: Splits video content into distinct segments so that each can be evaluated separately.
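
Object tracking adds one thing to frame-by-frame labels: a stable ID that follows the same object over time. The sketch below shows one simple way to carry IDs forward, greedy matching by box overlap (IoU). It is an illustrative assumption, not a description of any specific annotation tool; the function names, the 0.3 threshold, and the sample boxes are all hypothetical.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def assign_track_ids(frames: List[List[Box]], threshold: float = 0.3) -> List[Dict[int, Box]]:
    """Greedily carry track IDs from one frame to the next by best IoU."""
    tracks: List[Dict[int, Box]] = []
    previous: Dict[int, Box] = {}
    next_id = 0
    for boxes in frames:
        current: Dict[int, Box] = {}
        unused = dict(previous)  # previous-frame tracks not yet matched
        for box in boxes:
            best_id, best_iou = None, threshold
            for track_id, prev_box in unused.items():
                score = iou(box, prev_box)
                if score >= best_iou:
                    best_id, best_iou = track_id, score
            if best_id is None:
                # No sufficiently overlapping track: start a new one.
                best_id, next_id = next_id, next_id + 1
            else:
                del unused[best_id]
            current[best_id] = box
        tracks.append(current)
        previous = current
    return tracks

frames = [
    [(0, 0, 10, 20), (50, 0, 60, 20)],  # frame 0: two pedestrians
    [(52, 0, 62, 20), (2, 0, 12, 20)],  # frame 1: both moved right, listed in a different order
]
tracks = assign_track_ids(frames)
print(tracks[1])
```

Each pedestrian keeps its ID in the second frame even though the boxes arrive in a different order. Real footage with occlusions or fast motion usually needs human review on top of a heuristic like this.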

Applications of video annotation include autonomous driving (pedestrian and road sign detection), sports analytics, crowd monitoring for public safety, and security surveillance.

By annotating both pre-recorded and live video streams of vehicles, HabileData provided critical training data for machine learning models, enabling a California-based data analytics company to enhance traffic management. This video annotation solution improved traffic flow analysis, safety, and operational efficiency through real-time categorization and annotation of vehicles.

Text Annotation

Text annotation helps machines understand natural language by adding meaning to words, phrases, and complete documents.

- Named Entity Recognition (NER): Identifies and labels proper nouns, medical terms, and financial codes. For example, “Pfizer” is tagged as an organization and “Aspirin” as a drug.

- Sentiment Analysis: Marks phrases or sentences with their emotional value: positive, negative, or neutral. It is useful for customer service, social media tracking, and brand management.

- Intent Annotation: Classifies user queries by purpose, such as purchase, learn, or complain. It is essential for chatbots and voice assistants.

- Semantic Annotation: Adds metadata that enriches context, which improves search engine and recommendation engine results.
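
As an illustration of what an entity annotation can look like in practice, the sketch below stores each entity as a character span with a label and converts the spans into per-token BIO tags, a common training format for NER models. The sample sentence, the `ORG` and `DRUG` label names, and the whitespace tokenization are simplifying assumptions.

```python
from typing import List, Tuple

Span = Tuple[int, int, str]  # (start_char, end_char, label), end exclusive

def spans_to_bio(text: str, spans: List[Span]) -> List[Tuple[str, str]]:
    """Convert character-offset spans to per-token BIO tags (whitespace tokens)."""
    tagged = []
    position = 0
    for token in text.split():
        start = text.index(token, position)
        end = start + len(token)
        position = end
        tag = "O"  # outside any entity unless a span covers this token
        for span_start, span_end, label in spans:
            if start >= span_start and end <= span_end:
                tag = ("B-" if start == span_start else "I-") + label
                break
        tagged.append((token, tag))
    return tagged

text = "Pfizer and Aspirin appear in the same report"
spans = [(0, 6, "ORG"), (11, 18, "DRUG")]  # hypothetical annotator output

tagged = spans_to_bio(text, spans)
for token, tag in tagged:
    print(f"{token}\t{tag}")
```

Storing character offsets rather than token indices keeps the annotation independent of whichever tokenizer a model uses later.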

Applications of text annotation include virtual assistants (Siri, Alexa), intelligent search systems, patient record classification in healthcare, and compliance automation in finance.

A well-designed data annotation workflow helped a client significantly reduce data processing time and improve the accuracy of their AI model. Over 10,000 construction-related articles were annotated and verified, enhancing customer acquisition and cutting project costs by 50% while enabling more precise data analysis for their real estate platform.

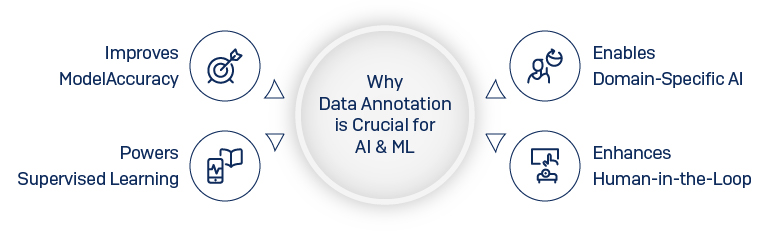

Why Data Annotation is So Crucial for Machine Learning & AI

AI models cannot make sense of raw data on their own. Data annotation is the link between raw data and human understanding: it encodes that understanding as labels, turning unprocessed data into information a model can use to produce useful outcomes.

1. Improves Model Accuracy

Accurate predictions start with high-quality annotations. Poorly labeled datasets lead to false positives, misclassifications, and biased results.

- Example: In medical AI, an incorrectly annotated X-ray can lead to a wrong cancer diagnosis.

- Stat: Gartner found that 25% of AI projects fail due to poor-quality data.

2. Enables Domain-Specific AI Applications

Different industries require different annotations.

- Healthcare: MRI scans annotated by radiologists help AI detect problems that the human eye can miss.

- Autonomous Vehicles: Training requires millions of annotated frames so that models learn traffic signal recognition, pedestrian detection, and road layout.

- Retail: Annotated product images and reviews enable accurate product recommendations.

Domain-specific annotations make AI models industry-ready, moving them from experimental to practical use.

3. Supports Supervised Learning at Scale

Supervised learning needs training data made up of labeled input-output pairs. Without annotations, models have no reference points to learn from.

- Example: Training an AI system to distinguish “cat” from “dog” requires thousands of labeled examples.

- Benefit: More annotations generally mean stronger generalization and more robust results across different situations.

4. Enhances Human-in-the-Loop Systems

Annotation supports continuous improvement.

- A human reviewer checks model predictions and provides feedback.

- Corrected data is re-annotated and fed back into the system.

- This cycle lets AI systems learn from new real-world situations.

Result: Higher adaptability and long-term reliability.
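
The review cycle described above can be sketched in a few lines. This is a simplified illustration in which the item IDs, labels, and record fields are hypothetical. Reviewer corrections override the model's predictions, and the merged records become the next round of training data.

```python
from typing import Dict, List

def apply_corrections(predictions: Dict[str, str], corrections: Dict[str, str]) -> List[dict]:
    """Merge reviewer corrections over model predictions into training records."""
    records = []
    for item_id, predicted in predictions.items():
        final = corrections.get(item_id, predicted)  # reviewer's label wins when present
        records.append({
            "id": item_id,
            "label": final,
            "corrected": final != predicted,
        })
    return records

# Hypothetical model output and the single item a reviewer changed.
predictions = {"img_001": "cat", "img_002": "dog", "img_003": "dog"}
corrections = {"img_003": "cat"}

records = apply_corrections(predictions, corrections)
corrected_rate = sum(r["corrected"] for r in records) / len(records)
print(f"{corrected_rate:.0%} of predictions were corrected by a reviewer")
```

Tracking the share of corrected predictions over successive rounds gives a rough signal of whether the model is actually improving from the feedback.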

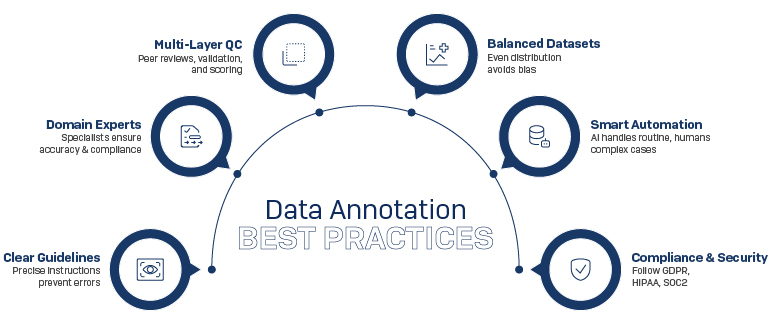

Data Annotation Best Practices

Poor annotation wastes time, money, and resources. Following best practices delivers accurate results and efficient operations while maintaining regulatory compliance.

- Define Clear Guidelines: Write specific guidelines with exact instructions to prevent misinterpretation.

- Use Domain Experts: Involve domain experts, such as radiologists or compliance officers, when handling sensitive data.

- Implement Multi-Layer Quality Control: Combine peer review, validation checks, and inter-annotator agreement scoring across multiple stages.

- Maintain Dataset Balance: Distribute data evenly across categories to avoid biased results.

- Integrate Automation Strategically: Use AI-assisted tools for repetitive tasks while humans handle complex cases.

- Ensure Compliance and Security: Protect data with robust security protocols that meet standards such as GDPR, HIPAA, and SOC 2.
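
Dataset balance is one of the easier practices to check automatically. The sketch below counts labels per class and reports each class's share along with the ratio between the largest and smallest class. The sample labels are made up, and what counts as an acceptable ratio depends on the project.

```python
from collections import Counter
from typing import Dict, List, Tuple

def class_balance(labels: List[str]) -> Tuple[Dict[str, float], float]:
    """Return each class's share of the dataset and the largest-to-smallest class ratio."""
    counts = Counter(labels)
    total = len(labels)
    shares = {label: count / total for label, count in counts.items()}
    imbalance_ratio = max(counts.values()) / min(counts.values())
    return shares, imbalance_ratio

# Hypothetical label column from an annotated image dataset.
labels = ["cat"] * 80 + ["dog"] * 15 + ["bird"] * 5

shares, ratio = class_balance(labels)
print(shares)
print(f"largest class is {ratio:.0f}x the smallest")
```

A ratio this high suggests collecting or annotating more examples of the rare classes before training.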

MIT Sloan found that data errors can reduce AI performance by up to 30%. Following best practices prevents failures and protects return on investment.

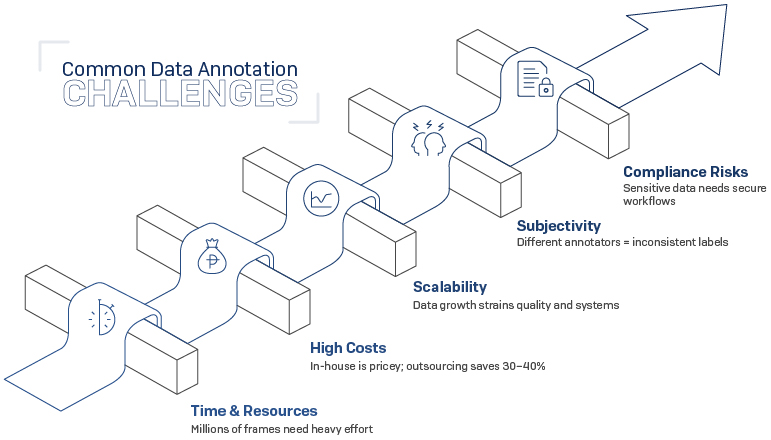

Challenges of Data Annotation in AI/ML Projects

Annotation comes with several challenges.

- Time and Resource Intensity: Annotating large datasets demands substantial time and money. Training autonomous vehicles can require annotating millions of frames each month.

- High Costs: In-house annotation is expensive due to salaries, infrastructure, and compliance demands. Outsourcing can reduce operational costs by 30-40% while maintaining quality.

- Scalability Issues: AI adoption drives rapid data growth, and maintaining quality standards across millions of annotations is an ongoing battle.

- Human Subjectivity: Different annotators can interpret the same data point in different ways, which makes labels inconsistent. Two annotators might label the same face image differently, one marking it as “happy” and the other as “neutral”. Strict guidelines combined with proper training minimize this subjectivity.

- Compliance and Security Risks: Healthcare, finance, and defense projects handle highly sensitive information. Errors in data management can lead to legal penalties and reputational harm. Secure workflows and compliance checks are critical.
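
Human subjectivity can be measured as well as managed. One widely used metric is Cohen's kappa, which scores agreement between two annotators after discounting the agreement expected by chance. The sketch below is a plain-Python version run on made-up “happy” and “neutral” labels; the annotator data is hypothetical.

```python
from collections import Counter
from typing import List

def cohens_kappa(labels_a: List[str], labels_b: List[str]) -> float:
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both annotators pick the same label independently.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)

# Hypothetical labels from two annotators for the same six face images.
annotator_1 = ["happy", "happy", "neutral", "neutral", "happy", "neutral"]
annotator_2 = ["happy", "neutral", "neutral", "neutral", "happy", "happy"]

kappa = cohens_kappa(annotator_1, annotator_2)
print(f"kappa = {kappa:.2f}")  # kappa = 0.33
```

The annotators agree on four of six images, but half of that agreement would be expected by chance, so kappa comes out at only 0.33. Low scores like this are usually a cue to tighten the guidelines or retrain annotators.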

Outsourcing Data Annotation: A Strategic Advantage

Outsourcing is one way to address these challenges.

Benefits for AI/ML Companies:

- Cost savings of 30% to 40%.

- Access to a worldwide pool of experts with deep knowledge of their fields.

- Quick annotation of millions of data points through scalable teams.

- Shorter project completion times.

- Data security through partners' adherence to worldwide data protection standards.

Startups in particular can cut costs significantly by outsourcing annotation instead of building their own annotation teams.

Key Industries Benefiting from Data Annotation

- Healthcare: Annotated medical images combined with genomic data help AI systems detect diseases at an early stage and accelerate drug development. IBM Watson systems, for example, rely on radiologist-labeled datasets for diagnostic precision.

- Autonomous Vehicles: LiDAR, radar, and video annotations train self-driving cars to detect pedestrians, traffic signs, and road conditions in settings ranging from urban areas to high-speed roads, and in different weather conditions.

- Retail & eCommerce: Product tagging, catalog labeling, and sentiment annotation of customer reviews improve search accuracy, power personalized recommendations, and reduce fraudulent returns.

- Finance: Annotated documents and transaction data improve fraud detection, automate risk evaluation, and streamline regulatory compliance.

- Agriculture: Annotated drone imagery, soil analysis, and climate data enable precision farming: detecting pests, monitoring crop health, and predicting yields more accurately.

The Future of Data Annotation in AI/ML

Annotation is evolving with AI itself:

- Semi-Supervised Learning: Models learn from fewer labeled examples.

- Synthetic Data Annotation: AI-generated datasets augment real-world data. McKinsey predicts 70% of enterprise AI will use synthetic data by 2030.

- AI-Assisted Annotation: Pre-labeling reduces the manual work humans need to do.

- Human + AI: A hybrid method that unites human expertise with AI speed achieves precision at scale.
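
A hybrid workflow often comes down to a routing rule: keep the model's pre-labels when it is confident, and send the rest to people. The sketch below illustrates the idea. The 0.9 threshold, the record fields, and the sample confidences are assumptions for the example, and many teams also spot-check the auto-accepted items.

```python
from typing import Dict, List, Tuple

def triage(prelabels: List[Dict], threshold: float = 0.9) -> Tuple[List[Dict], List[Dict]]:
    """Split model pre-labels into auto-accepted items and items needing human review."""
    auto_accepted = [p for p in prelabels if p["confidence"] >= threshold]
    needs_review = [p for p in prelabels if p["confidence"] < threshold]
    return auto_accepted, needs_review

# Hypothetical pre-labels produced by a model before human annotation.
prelabels = [
    {"id": "doc_1", "label": "invoice", "confidence": 0.98},
    {"id": "doc_2", "label": "contract", "confidence": 0.62},
    {"id": "doc_3", "label": "invoice", "confidence": 0.91},
    {"id": "doc_4", "label": "receipt", "confidence": 0.45},
]

auto_accepted, needs_review = triage(prelabels)
workload_reduction = len(auto_accepted) / len(prelabels)
print(f"{len(needs_review)} items go to annotators; manual workload drops by {workload_reduction:.0%}")
```

Raising the threshold sends more items to human annotators and lowers the risk of accepting a wrong pre-label, so the setting is a trade-off between speed and accuracy.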

Conclusion

Data annotation is the backbone of every successful AI and machine learning project. Even the most advanced algorithms cannot produce reliable, accurate, or scalable results from poorly labeled datasets.

Annotated data powers industry-specific solutions with measurable returns, from medical diagnosis systems and self-driving cars to financial crime prevention. By following best practices, namely clear guidelines, expert involvement, balanced datasets, and strong compliance, businesses of all sizes can achieve high annotation quality when outsourcing data labeling.

As AI adoption accelerates, so will the need for accurate, large-scale annotation. Organizations that invest in strong annotation practices now will build AI systems that are more intelligent and more adaptable to future needs.

If you want your AI to perform at its full potential, start by strengthening its foundation: accurate, secure, and scalable data annotation.

HabileData is a global provider of data management and business process outsourcing solutions, empowering enterprises with over 25 years of industry expertise. Alongside our core offerings - data processing, digitization, and document management - we’re at the forefront of AI enablement services. We support machine learning initiatives through high-quality data annotation, image labeling, and data aggregation, ensuring AI models are trained with precision and scale. From real estate and ITES to retail and Ecommerce, our content reflects real-world knowledge gained from delivering scalable, human-in-the-loop data services to clients worldwide.