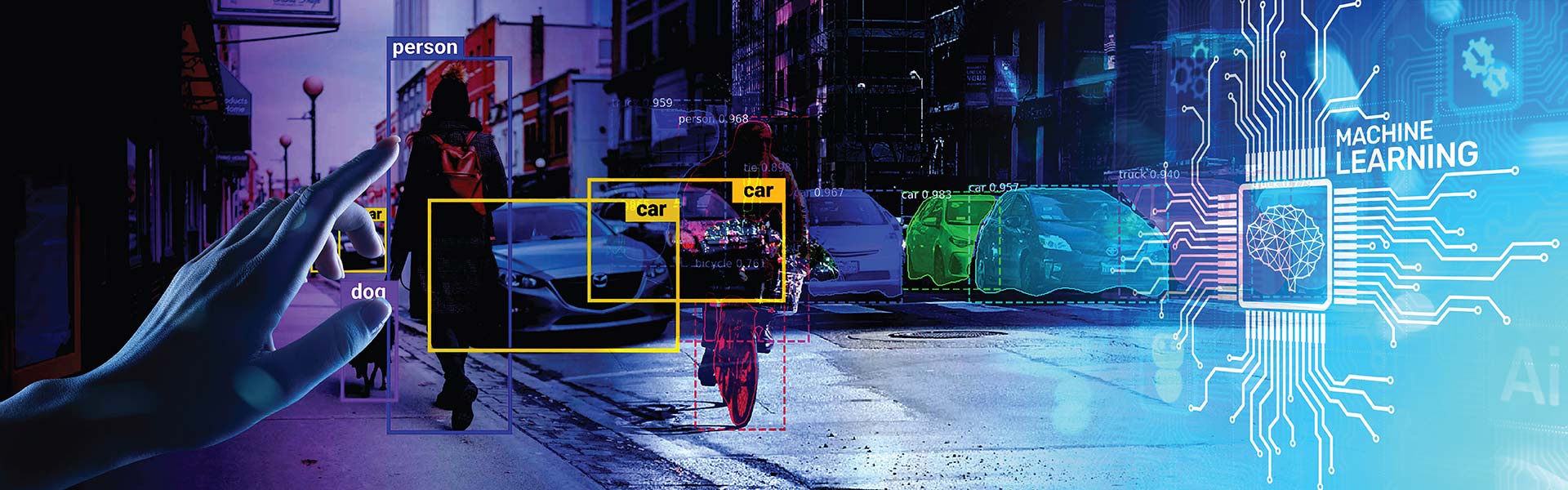

The abilities of a computer vision application depend upon the strength and quality of the annotated images it has for its reference. Naturally, image annotation is the first critical aspect in the development of computer vision, whether for monitoring road traffic, factory production lines, or scanning medical images to detect anomalies.

Contents

- What is computer vision?

- What is image annotation?

- Why image annotation is critical?

- Types of images can be annotated

- Types of image annotation

- 7 image annotation techniques for computer vision

- How are images annotated?

- Regular applications of image annotation across industries

- 7 image annotation best practices

- Image annotation challenges for computer vision

- Manual, synthetic and programmed annotation

- HabileData – The perfect outsourcing partner for image annotation

- Conclusion

Annotated images help computers understand what’s really important in a picture or video. If we want computers to recognize actionable things in a picture, we need to teach them what to look for. This is done through image annotation, by labeling, tagging, and adding metadata to images, so AI systems can analyze new pictures or videos and take decisions on their own.

The performance of computer vision technologies, like facial recognition or self-driving cars, depends on the quality of image annotation in their training data. But the annotation of images can be complex and time consuming if not planned carefully. This is why we created this guide – to make sure everyone on a project understands the key terms, concepts and techniques before starting off to annotate images.

What is computer vision?

Computer vision is a field of artificial intelligence related to image and video analysis. In computer vision, machine learning technologies are used to teach a computer to “see” and extract information from images. These systems comprise photo or video cameras and specialized software that identify and classify objects. They can analyze images (photos, pictures, videos, barcodes), as well as faces and emotions.

What is image annotation?

Image annotation, image labeling, or tagging is the first step in creating computer vision models. It is by studying these labels that a computer can recognize and associate objects to their contexts.

But labeling the enormous volumes of images and videos created every minute is beyond human capacities. So, to manage image annotation, human annotators create the primary datasets first. Then these datasets are used to train MLM and AI models. The AI, once trained, joins in to parse and annotate huge volumes of images quickly and accurately.

Beyond the creation of the primary training datasets, human annotators continue supervising, sampling, and ensuring the output accuracy of AI-based annotation.

Accurate labeling helps a machine learning model improve its understanding of critical factors in the image data. Most of the time, labels are predefined by a machine learning (ML) engineer. The engineer (team) handpicks the labels to help the computer vision model recognize relevant objects in the images and act on that information. So, the quality and accuracy of image annotation of the primary training datasets determine the overall output quality of a machine learning or AI model.

Why image annotation is critical in computer vision

Image annotation, also known as image tagging or image transcribing, is a part of data labeling work. It involves human annotators meticulously tagging or labeling images with metadata information and properties that will empower machines to see, identify and predict objects better.

Accurate image annotation helps computers and devices make informed, intelligent, and ideal decisions. The success of computer vision completely depends on the accuracy of image annotation.

When a child sees a potato for the first time and you say it’s called a tomato, the next time the child sees a potato, it is likely that he/she will label it as a tomato. A machine learning model learns similarly, by looking at examples, and hence the performance of the model depends on the annotated images in the training datasets.

While you can easily show a human child what is a potato, to teach AI or machine learning models the same, experts have to create image annotation examples of potatoes. They have to specify to the computer the visible attributes that help to detect and recognize potatoes.

However, the process doesn’t end there. Because the machine needs to learn how to distinguish a potato from other objects, including similar objects. And it needs to learn about other objects often visible in the work environments in which it will encounter potatoes. You can’t teach it about every other object in the world, but you need to teach it about other objects kept in the store (or other environment) where it will be put to work.

So, AI and ML companies have to annotate a lot many other images to instruct machines what potatoes are ‘not’. Through continuous training, machines learn to detect and identify tomatoes and potatoes seamlessly in accordance with their niche, purpose, and datasets.

People also read: Infographics – Data Labeling for AI Development

Which images can be annotated?

Single-frame and multi-frame images, like videos, can be annotated for machine learning. Videos can be annotated continuously, as a stream, or frame by frame. The most commonly annotated images include:

- 2D images and video (multi-frame) including data from cameras or other imaging technology such as an SLR (single lens reflex) camera or optical microscopes, etc.

- 3D images and video (multi-frame), including data from cameras or other imaging technology, such as electron, ion, or scanning probe microscopes, etc.

Before jumping into effective annotation techniques for computer vision, it is advisable to be aware of the different types of image annotations, so that you pick the right type for your project.

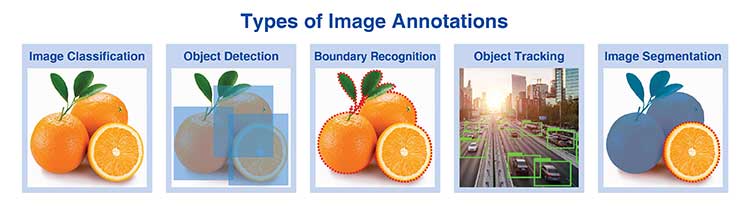

What are the different types of image annotations?

Usually, images contain several elements. You focus on a relevant subject or object and overlook other elements in the picture. However, many a time these ignored objects are required for proper analysis. Or they are removed to keep data bias or data skewing at bay.

Apart from this, machine learning models should know of all the elements present in an image to make decisions like humans do. Identifying other objects is also a part of image annotation. So, there are different tasks and types of work done in image annotation projects including:

- Image classification – The process involves a broad classification of elements like vehicles, buildings, and traffic lights.

- Object detection – More specific annotation for distinguishing between similar objects such as cars and taxis, lanes 1, 2, or more.

- Boundary recognition – This is to add more precise information about an object like its colors, appearance, location, etc. like a yellow taxi on lane 2.

- Object tracking – This is used specifically to identify and label the presence, location, and count of one or more objects in several frames. Also, it is used to track object movements and to study patterns from video footage and surveillance cameras.

- Image segmentation – This is used to divide images into several segments and to assign every pixel specific class or class instance. Segmentation types include:

- Semantic segmentation – Identifying the boundaries between similar objects

- Instance segmentation – Identifying and labeling each object in an image

- Panoptic segmentation – Using semantic segmentation to produce data labeled for background, and instance segmentation to label the objects in the image

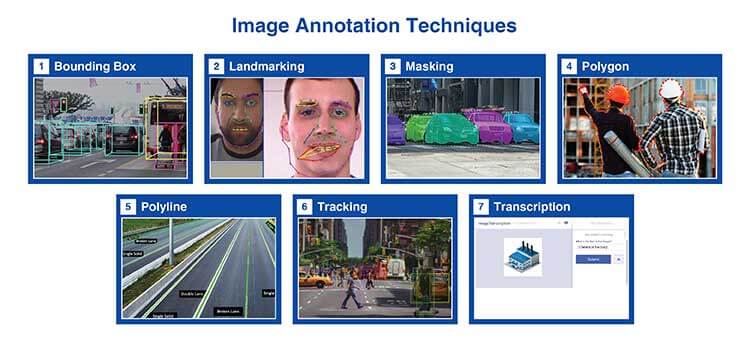

7 image annotation techniques for computer vision

Before going into how different industries use image annotation, it is important to know about the various image annotation techniques used in computer vision.

- Bounding box – These boxes are two-dimensional (2D) or three-dimensional (3D). A 3D bounding box is also called a 3D Cuboid. This annotation technique is mainly used to draw boxes around relatively symmetrical target objects, such as vehicles, pedestrians, and road signs. It is useful when the shape of the object is of less interest or where there is no occlusion.

- Landmarking – This annotation technique helps in plotting characteristics in data, i.e., facial recognition to detect facial features, expressions, and emotions. Use of pose-point annotations helps in annotating body position and alignment. Leveraged particularly for sports analytics, landmarking helps in deciding where a baseball pitcher’s hand, wrist, and elbow are in accordance with one another when the pitcher is throwing the baseball.

- Masking – This particular annotation technique helps in hiding areas in an image and to highlight other areas of interest. Image masking makes it easier to draw attention to the desired area in an image.

- Polygon – Used specifically to highlight highest points or vertices of a target object in an image and annotate its edges. This technique is particularly useful for labeling objects like houses, areas of land, or vegetation, which are more likely to be irregular in shape.

- Polyline – Made of one or more-line segments, it is used to plot continuous lines when working with open shapes, such as road lane markers, sidewalks, or power lines.

- Tracking – This is used to label and plot an object’s movement across multiple frames in a video. It is basically used to fill in the movement and track or interpolate the movement in the interim frames that were annotated.

- Transcription – It helps in annotating text in images or videos when there is multimodal information (i.e., images and text) in the data.

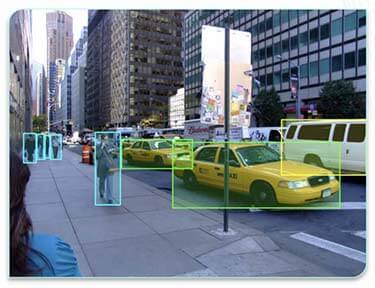

How are images annotated?

A human annotator evaluates a set of images, identifies objects of interest in each image, and annotates them by indicating attributes like its shape, features. Metadata is added to the image in order to annotate or label it. Metadata is a type of data where multiple aspects of a picture are defined with the help of keywords, which are also a type of data.

In the example shown in this image, bounding boxes are placed around the relevant objects to annotate it. The data that will get incorporated into machine-learning algorithms will be:

- Pedestrians marked in blue

- Taxis marked in yellow

- Trucks marked in yellow

The business use case and project requirements define the total number of annotations or labels required on each image. Some projects may warrant a single label to represent the content of an entire image. This is known as image classification. Also, there are projects that require tagging of multiple objects in a single image, known as bounding boxes. Popular image annotation apps usually have features like a bounding box annotation tool and a pen tool for freehand image segmentation.

Regular applications of image annotation across industries

1. Healthcare

For patient diagnosis and treatment, the medical fraternity relies on visual data such as MRI, x-ray scans, radiology images. Labeling medical imaging data helps in training, developing, and optimizing computer vision systems to diagnose diseases.

- To annotate/label human organs in medical x-rays, the Polygon Technique is used as it helps in training the ML model to identify deformities in body parts.

- AI models are trained using thousands of radio images labeled with cancerous and non-cancerous spots until the machine can learn to differentiate on its own.

- Doctors are supplementing their diagnoses today with AI-powered solutions. They help in identifying diseases, improving the accuracy of treatment and diagnosis, reducing costs, and cutting down patient wait times in healthcare.

2. E-Commerce

AI and ML have succeeded in taking the eCommerce industry to the next level in providing better and effective shopping experiences. Image annotation helps in computer vision-based algorithms capable of recognizing products like clothes, shoes, bags, accessories, etc. It is further used to manage and maintain a searchable product database, maintain product catalogs, and provide an enhanced search experience.

3. Retail

Image annotation plays a critical role in building AI models that can search product catalogs to provide results that buyers wish to see. The 2D bounding box annotation technique is used extensively by shopping malls and grocery stores for labeling in-store images of products like shirts, trousers, jackets, persons, etc. For this, they also train their ML models on various attributes such as price, color, design, etc.

Many retailers now are piloting robots in their stores to collect images of shelves to determine if a product has low stock or is out-of-stock, or whether the shelves need reordering. These robots are capable of scanning barcode images to gather product information using image transcription, a method of image annotation.

4. Supply chain

The lines and splines annotation technique is used to label lanes in a warehouse. It is used to identify racks based on product types and their delivery locations. This information helps the robots to optimize their paths (routes) and automate the delivery chain.

5. Manufacturing

Manufacturers use image annotation to capture information on inventories in their warehouses. Sensory image data is used to identify products that are likely to run out of stock. They also label image data of equipment to train computers to identify faults or failures and raise flags for maintenance.

6. Self-driving cars

The potential of autonomous driving, though enormous, rests on the accuracy of image annotation. Accurately annotated images provide training data of the car’s environment to Computer Vision-based machine learning systems. Semantic segmentation is used to annotate pixels on an image and identify objects like road, cars, traffic lights, pole, pedestrians, etc. It helps autonomous vehicles to identify surroundings and sense obstacles.

7. Agriculture

Annotating aerial and satellite imagery for use by AI, helps farmers in estimating crop yields, evaluating soil and other estimations and predictions. There are companies who get camera images annotated to differentiate between crops and weeds at pixel-level. Thus, the data from annotated images guides where to spray pesticides, shows where weeds have grown, and helps save time, money, and materials in farm maintenance.

8. Finance

The industry is still taking baby steps in harnessing the power of image annotation. But prevalent uses are already proving their value. Caixabank uses face recognition to verify the identity of customers withdrawing money from ATMs. The “pose-point” process is used to map facial features like eyes, lips, and mouth for faster determination of identity, and reduction of potential fraud. Image annotation is also used for checking receipts for reimbursement or checks to deposit via a mobile device.

7 best practices for annotating and labeling images

Here are a few best practices that can improve the quality and performance of image annotation projects. You can include them in training and quality review of your annotation teams:

- Label objects in their entirety – Ensuring that bounding boxes or pixel maps cover the entire object of interest is the most important aspect of an image annotation project. At the same time, if the image contains multiple objects, annotating all of them accurately is a must. Missing any object can impair the learning process of the AI model. A computer vision model can be easily confused by what a full object constitutes, if only a portion of an object is labeled.

- Fully label obstructed objects – Not annotating objects because they were partially blocked or are out of view can confuse the computer vision algorithm. Instead, fully labeling occluded objects as if they were in full view, will help. Do not draw bounding boxes on the partially visible part of the object. Do not get baffled if boxes overlap and there is more than one object of interest that appear occluded. Just label them accordingly and accurately.

- Maintain labeling consistency across images – Human annotators should be consistent in their labeling. Subtle differences between features and objects in an image at times may make it difficult. Reviewing edge cases with the person who annotated it will prove helpful to clarify what label should be applied in each edge case.

- Use specific label names – Be exhaustive, but specific, while annotating or labeling objects in images. It is better to be overly specific than leaving something out. This makes your relabeling process easier. For example, while building a milk breed cow detector, including a class for Friesian and Jersey, though the object of interest is milk breed cow, is advisable and beneficial for the project. Realizing the existence of individual milk breed cows too late and then relabeling the entire dataset is wasteful and demotivating.

- Provide clear guidelines – If your human annotators do not agree on any annotation task, it means the guidelines for the task are not clear and effective. It is basic to provide labelers with written guidelines with visual examples showing typical cases and boundary cases. And you should keep adding clarity to the guide as a WIP to accommodate future model improvements. With a good annotation guide, you just need some tweaking to apply it to fresh projects and train annotators for the new job at hand.

- Define annotation tasks specifically – In order to have more chances of interpretation and disagreement, and thus improvements, define data annotation tasks very specifically. Take into account the expertise and knowledge of your human annotators, and of course, the impact your specifications will have on the annotation project.

- Test reliability at a small scale – Always train a sample model to check for issues, once the team has annotated a small group of images. This early checking can help discover problems in the annotations or label scheme and resolve them early.

Challenges in image annotation for computer vision

Here are some common image annotation challenges faced by companies that build AI and ML models:

-

Human/AI when to use which – Human annotation and automated annotation are the two widely used data or image annotation methods used. Human annotation is slower, costly and involves training of human annotators – but promises highly accurate project-specific results. Automated image annotation with ready generic guides and rules proves cost-effective but does not assure the level of accuracy a specific project may need. With smaller projects, human annotation can be a choice, but with larger projects the costs may be too high.

Current practice with AI models is to use human annotators for creating the primary datasets and then use humans for supervision, adjustments and quality checking, while the AI does the bulk of the job. But choosing which to use when remains a challenge that requires strategic solutions.

-

Guaranteeing consistent data – Machine learning models need consistent and high-quality training data in order to make accurate and timely predictions. On the other hand, beliefs, culture, and personal biases of human image annotators are capable of impacting the consistency of the data flow, resulting in skewed machine learning models. AI and ML companies struggle to include counter measures for these biases in the training sessions for human image annotators.

-

Choosing a suitable annotation tool – The increasing demand of image annotation for creating training datasets has given rise to a lot of image annotation platforms and tools, each providing different capabilities for different types of annotations. However, these tools have their own challenges and learning curves and the abundance of choice makes it difficult for AI and ML companies to choose one that best fits their use case.

Manual, synthetic and programmed annotation

Deep learning methods typically require vast amounts of training data to reach their full potential. The training of such models with millions of parameters requires a massive amount of labeled training data to provide state-of-the-art results. Clearly, the creation of such massive datasets has become one of the main limitations of these approaches: they require human input, are very costly, time consuming and error prone.

Synthetic image annotation

Synthetic labeling is the creation or generation of new data that contains the attributes necessary to train your AI or ML model. One way to perform synthetic labeling is through generative adversarial networks. Two neural networks (a generator and a discriminator) are used for creating fake data and distinguish between real and fake data respectively. This results in highly realistic new data, and also allows you to create all-new data from pre-existing datasets. This makes synthetic image annotation a high quality and time-saving option.

Training with synthetic data is very attractive because it decreases the burden of data or image annotation. Synthetic annotation enables generating an infinite amount of training images with large variations. In addition, training with synthetic samples allows control of the rendering process of the images and thus the properties of the dataset.

However, apart from the need for large amounts of computing power, the main challenge of this approach is how to bridge the so-called “domain gap” between synthesized and real images. It is also observed that models trained on synthetic data perform poorly against real-life data.

Programmed annotation

Programmatic data labeling is the process of using scripts to automatically label data. This process can automate tasks including image and text annotation, which eliminates the need for large numbers of human labelers. A computer program also does not need rest, so you can expect results much faster than when working with humans.

However, this approach is still far from perfect. Programmatic data labeling is therefore often combined with a dedicated quality assurance team. This team reviews the dataset as it is being labeled.

Manual image annotation

Humans can identify new objects and their distinct attributes easily. Also, manual image annotation can fulfil any additional demands for customization needed by a project. The hallmarks of automation are scalability and consistency, while that of human annotation are flexibility and problem solving.

People also read: Infographics – Manual vs. Automatic Image Annotation

HabileData – The perfect outsourcing partner for image annotation services

Training, validation and testing of your computer vision algorithms with accurately annotated training data determines the success of your AI model. For your AI algorithm to recognize objects and make decisions like humans, each image in the training data should be precisely labeled by experts.

HabileData is a three-decade old data, video and image annotation company providing a suite of image annotation and labeling services. It can help fulfil all your annotation requirements and help you scale your AI and ML initiatives. With Human-in-the-loop as an integral part of the image annotation process, HabileData leverages a highly skilled human workforce for annotation, irrespective of the size and complexity of the project. Annotation professionals at HabileData work as your extended in-house team ensuring a collaborative workflow for your machine learning image labeling projects. Their image labeling service costs are also reasonable when you compare both quality and quantity against the costs.

Conclusion

Image annotation is an extremely critical operation given the nature of computer vision applications. Computer vision is mostly used in highly sensitive areas ranging from healthcare to worker safety and surveillance. False positives and errors can sometimes snowball into catastrophes. This is why, both a highly skilled team of experts and accurately trained AI models lie at the core of successful image annotation for computer vision applications. And you can’t do one without the other, especially in large image annotation projects.

Connect with our team of professionals today to get your computers to make better informed and intelligent decisions.

Get in Touch with our Experts »

HabileData is a global provider of data management and business process outsourcing solutions, empowering enterprises with over 25 years of industry expertise. Alongside our core offerings - data processing, digitization, and document management - we’re at the forefront of AI enablement services. We support machine learning initiatives through high-quality data annotation, image labeling, and data aggregation, ensuring AI models are trained with precision and scale. From real estate and ITES to retail and Ecommerce, our content reflects real-world knowledge gained from delivering scalable, human-in-the-loop data services to clients worldwide.