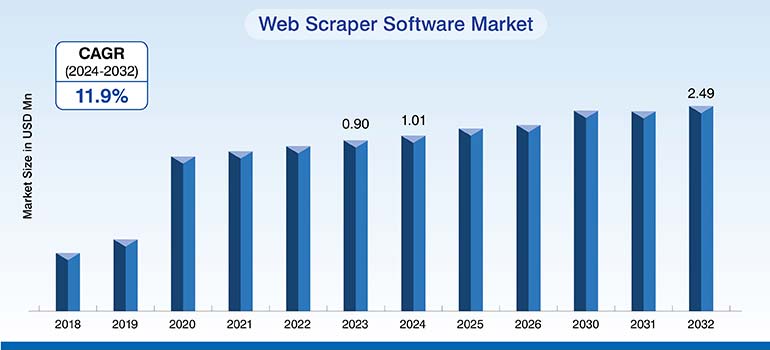

The growth of the web scraping industry highlights the importance of automated data collection tools, which help businesses gather website data for strategic decision-making, market research, lead generation, and competitor analysis.

Data plays a central role in strategic decision-making and in helping companies stay ahead in their industries. Web scraping tools automate the extraction of data from websites for purposes such as price tracking, competitor monitoring, and customer sentiment analysis. This gives businesses the insights they need to make informed decisions. Demand for accurate data scraping is growing rapidly.

The web scraper software market is projected to grow from USD 1.01 billion in 2024 to USD 2.49 billion by 2032.

As websites become more dynamic and deploy new defenses against bots, older scraping methods often fail to deliver results. Modern scraping calls for smarter tools that can handle JavaScript-heavy pages, avoid detection, manage large jobs, and extract structured data from websites.

Choosing the best web scraping tool is not just a technical decision. It affects how your projects run, how easily they scale, and how safely you operate.

How to choose the right web scraping tool for your data needs

Here are six key things to think about:

- Technical expertise: Consider your team's technical background, since some web scraping tools require coding knowledge.

- Scalability: Look for tools that can grow with your needs and handle large volumes of data.

- Anti-bot protection: Check for features such as rotating proxies and CAPTCHA solving that help the tool get past anti-bot barriers.

- Data export options: Make sure the tool can deliver data in formats such as CSV, Excel, or JSON.

- Cost and licensing: Check what the license includes, such as team access and data limits, and compare support levels and free versus paid features.

- Legal compliance: Make sure your data collection complies with privacy regulations such as GDPR and CCPA, avoid handling personal information, and review each website's terms of service.
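If a tool's export options don't quite match your pipeline, converting its output is usually simple. The sketch below writes the same rows as CSV and JSON using only Python's standard library. It assumes the scraper returns a list of flat dictionaries, and the sample records are hypothetical. CSV files open directly in Excel. Native .xlsx output would need a third-party package such as openpyxl.

```python
import csv
import io
import json

# Hypothetical scraped records: a list of flat dicts sharing the same keys.
records = [
    {"product": "Widget A", "price": 19.99, "in_stock": True},
    {"product": "Widget B", "price": 24.50, "in_stock": False},
]


def to_csv(rows: list) -> str:
    """Serialize flat dicts to CSV text, using the first row's keys as the header."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows: list) -> str:
    """Serialize the same rows as a pretty-printed JSON array."""
    return json.dumps(rows, indent=2)


csv_text = to_csv(records)
json_text = to_json(records)
print(csv_text)
```

To write to a file instead of a string, open the file with `newline=""` and pass it to `csv.DictWriter` in place of the `StringIO` buffer.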

10 best web scraping tools for scalable and accurate data extraction

1. Scrapingdog

Scrapingdog is a web scraping API for extracting data from many types of websites, including search engines, social media platforms, and e-commerce sites.

Scrapingdog's web scraping API can be used for tasks such as price monitoring, SEO monitoring, lead generation, and product data extraction.

It takes care of proxies, CAPTCHAs, and IP blocks, so you don't have to manage them yourself.
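To see what "dealing with proxies" involves, here is a minimal sketch of client-side proxy rotation with retries. This is the kind of logic a managed API such as Scrapingdog is meant to take off your hands. The proxy addresses and the `fake_fetch` function are hypothetical placeholders. A real fetch function would send the request through the given proxy and raise an exception when the request is blocked or times out.

```python
import itertools
from typing import Callable, List, Optional

# Hypothetical proxy pool; real addresses would come from a proxy provider.
PROXIES = [
    "http://proxy-1.example:8080",
    "http://proxy-2.example:8080",
    "http://proxy-3.example:8080",
]


def fetch_with_rotation(
    url: str,
    fetch: Callable[[str, str], str],
    proxies: List[str],
    max_attempts: int = 3,
) -> str:
    """Try proxies in round-robin order until one request succeeds.

    `fetch(url, proxy)` is supplied by the caller and should raise an
    exception when a request is blocked, times out, or returns a CAPTCHA.
    """
    if not proxies:
        raise ValueError("proxy pool is empty")
    pool = itertools.cycle(proxies)
    last_error: Optional[Exception] = None
    for _ in range(max_attempts):
        proxy = next(pool)
        try:
            return fetch(url, proxy)
        except Exception as err:  # remember the failure, move to the next proxy
            last_error = err
    raise RuntimeError(f"all {max_attempts} attempts failed") from last_error


# Usage with a hypothetical fetch function that treats proxy-1 as blocked.
attempted = []


def fake_fetch(url: str, proxy: str) -> str:
    attempted.append(proxy)
    if "proxy-1" in proxy:
        raise ConnectionError("blocked")
    return f"fetched {url} via {proxy}"


result = fetch_with_rotation("https://example.com", fake_fetch, PROXIES)
```

Each call here restarts the rotation from the first proxy. A production pool would usually keep rotation state across calls, back off between attempts, and retire proxies that keep failing.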

2. ScraperAPI

Designed for scale, ScraperAPI aims to scrape any website, regardless of size or difficulty. It supports geotargeting for localized data, with access to more than 40 million proxies in over 50 countries, which greatly reduces the risk of being blocked.

ScraperAPI handles proxy rotation, CAPTCHAs, browser rendering, and data structuring. Beyond scalability, it is built to return results quickly even from the toughest websites to scrape.

ScraperAPI can process millions of requests asynchronously and offers a dedicated account manager and live support.
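ScraperAPI is typically called by passing the target page to its endpoint as a query parameter. The sketch below only builds the request URL. The parameter names reflect ScraperAPI's public documentation as we understand it: `api_key`, `url`, `country_code` for geotargeting, and `render` for JavaScript rendering. Confirm them against the current docs before use. `YOUR_API_KEY` is a placeholder.

```python
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

# Endpoint per ScraperAPI's public docs (assumption: verify before use).
SCRAPERAPI_ENDPOINT = "https://api.scraperapi.com/"


def scraperapi_url(
    api_key: str,
    target_url: str,
    country_code: Optional[str] = None,
    render: bool = False,
) -> str:
    """Build a ScraperAPI request URL; urlencode escapes the nested target URL."""
    params = {"api_key": api_key, "url": target_url}
    if country_code:
        params["country_code"] = country_code  # route through proxies in this country
    if render:
        params["render"] = "true"  # ask the service to render JavaScript
    return f"{SCRAPERAPI_ENDPOINT}?{urlencode(params)}"


request_url = scraperapi_url(
    "YOUR_API_KEY", "https://example.com/products?page=2", country_code="us"
)
# Decode the query string again to check that the target URL survived intact.
query = parse_qs(urlparse(request_url).query)
```

Passing `request_url` to `urllib.request.urlopen` (or any HTTP client) would then return the page HTML. Keeping the key out of source code, for example in an environment variable, is a sensible default.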

3. Octoparse

Octoparse is a no-code web scraping solution that turns web pages into structured data in just a few clicks. You can build reliable scrapers without writing code and design them in a visual workflow designer that displays each step in a browser. Octoparse is available as both a desktop application and a cloud-based platform, each with an intuitive visual interface.

With hundreds of preset templates for popular websites, Octoparse can deliver data almost instantly with virtually no setup. This makes it one of the best no-code scrapers for teams and individuals alike.

It also helps you stay ahead of common scraping obstacles with IP rotation, CAPTCHA solving, and proxy support.

4. Apify

Apify is more than a web scraping API. It is a full-stack platform that gives developers what it describes as the largest ecosystem for building, deploying, and publishing web scrapers, AI agents, and automation tools. Apify calls these tools Actors.

Actors come with built-in support for integrations, proxies, and storage. In essence, an Actor automates a task such as web scraping, and Actors can scale from simple jobs to complex workflows.

Apify offers a library of more than 6,000 pre-built Actors. Its categories include AI, automation, developer tools, e-commerce, lead generation, MCP servers, real estate, SEO tools, and social media.
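Actors can also be started over Apify's REST API. The sketch below builds, but does not send, a request to the `run-sync-get-dataset-items` endpoint. That endpoint runs an Actor and returns its dataset items in a single call. The endpoint path follows Apify's API v2 documentation as we understand it. The Actor ID `your-username~your-actor`, the token, and the input fields are hypothetical placeholders, since each Actor defines its own input schema.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

APIFY_API_BASE = "https://api.apify.com/v2"


def apify_run_sync_request(actor_id: str, token: str, actor_input: dict) -> Request:
    """Build a POST request that runs an Actor and returns its dataset items.

    Actor IDs in Apify URLs use "~" between the username and the Actor name.
    """
    query = urlencode({"token": token})
    url = f"{APIFY_API_BASE}/acts/{actor_id}/run-sync-get-dataset-items?{query}"
    return Request(
        url,
        data=json.dumps(actor_input).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical Actor and input; a real Actor documents its own input fields.
req = apify_run_sync_request(
    "your-username~your-actor",
    "YOUR_APIFY_TOKEN",
    {"startUrls": [{"url": "https://example.com"}]},
)
```

Sending this with `urllib.request.urlopen(req)` would block until the run finishes, so the synchronous pattern suits shorter runs. Longer jobs are better started asynchronously and polled.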

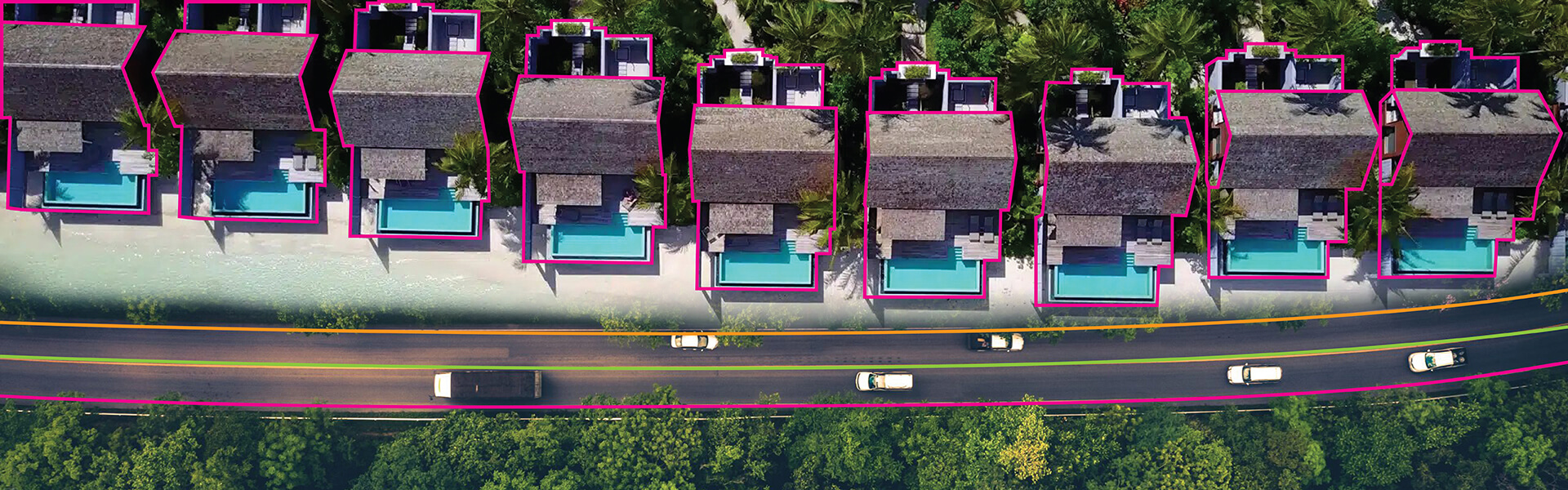

Case Study

A US-based real estate publisher outsourced its property data collection to HabileData. Find out how HabileData used web scraping tools and a structured workflow to scrape, cleanse, and format real estate data for the client.

5. Zyte

Zyte is an all-in-one tool for unblocking, browser rendering, and data extraction. Its automated proxy rotation selects the leanest set of proxies for the job, and it also provides browser rendering and CAPTCHA bypass.

Its web scraping API handles proxy rotation for you. Zyte also emphasizes compliance, offering features that help businesses collect data responsibly under GDPR and CCPA guidelines.
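The sketch below prepares, but does not send, a Zyte API extraction request, to give a sense of what a call looks like. It assumes the publicly documented pattern of POSTing JSON to `https://api.zyte.com/v1/extract`, with the API key as the HTTP Basic username and an empty password. The `browserHtml` field asks for the rendered page. `YOUR_ZYTE_API_KEY` is a placeholder, and field names should be checked against Zyte's current reference.

```python
import base64
import json
from urllib.request import Request

ZYTE_EXTRACT_ENDPOINT = "https://api.zyte.com/v1/extract"


def zyte_extract_request(api_key: str, target_url: str) -> Request:
    """Build a Zyte API request asking for the browser-rendered HTML of a page."""
    body = {"url": target_url, "browserHtml": True}
    # HTTP Basic auth: API key as the username, empty password.
    credentials = base64.b64encode(f"{api_key}:".encode("utf-8")).decode("ascii")
    return Request(
        ZYTE_EXTRACT_ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Basic {credentials}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = zyte_extract_request("YOUR_ZYTE_API_KEY", "https://example.com")
```

If the request is sent, the JSON response is expected to carry the rendered page in its `browserHtml` field.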

6. Oxylabs

When it comes to pulling large volumes of data from websites for market research or competitor analysis, Oxylabs is one of the names that comes up most often.

And honestly, it’s not hard to see why. Oxylabs has strong residential and datacenter proxy services.

What really keeps them ahead of the curve goes beyond the proxies themselves: they offer real-time tools built for large scraping projects.

And if you’ve ever been stuck in a challenging project, you’ll appreciate this: they actually assign you an account manager to help. That kind of hands-on support isn’t something you find everywhere.

7. Diffbot

Diffbot extracts content from websites automatically, reading pages much as a human would.

What sets Diffbot apart from other web scraping tools is its ability to interpret a page without hand-written extraction rules. Using computer vision, Diffbot classifies each page into one of 20 predefined types.

Once a page is classified, a machine learning model trained for that page type identifies its key attributes.

As a result, the page is converted into clean, structured data that is ready for your application. Diffbot's Knowledge Graph contains nearly 10 billion linked datasets of companies, products, articles, and discussions.
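Diffbot exposes this classification through its Analyze API. The sketch below builds a request URL and reads page types out of a response. The v3 endpoint and the `token` and `url` parameters follow Diffbot's documentation as we understand it. `sample_response` is a hypothetical, heavily trimmed response. It only shows the shape the helper expects: an `objects` list whose entries carry a `type`.

```python
from typing import List
from urllib.parse import urlencode

DIFFBOT_ANALYZE_ENDPOINT = "https://api.diffbot.com/v3/analyze"


def diffbot_analyze_url(token: str, page_url: str) -> str:
    """Build an Analyze API URL; Diffbot decides the page type itself."""
    return f"{DIFFBOT_ANALYZE_ENDPOINT}?{urlencode({'token': token, 'url': page_url})}"


def extracted_types(response: dict) -> List[str]:
    """Return the type of each extracted object, e.g. 'article' or 'product'."""
    return [obj.get("type", "unknown") for obj in response.get("objects", [])]


analyze_url = diffbot_analyze_url("YOUR_DIFFBOT_TOKEN", "https://example.com/item/42")

# Hypothetical, trimmed response used only to illustrate the expected shape.
sample_response = {"objects": [{"type": "product", "title": "Example item"}]}
types = extracted_types(sample_response)
```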

8. ParseHub

If you have ever struggled to pull data from websites that use JavaScript or AJAX, ParseHub is honestly worth checking out. It’s designed to deal with exactly those kinds of sites.

ParseHub is a web scraping tool that can automatically extract millions of data points from almost any website.

Its easy-to-use graphical interface lets you scrape dynamic websites and collect large amounts of data in just a few minutes.

You don't have to write a single line of code. The interface is intuitive: you click the parts of the page you want, and ParseHub figures out the rest.

Another useful feature is its REST API, which lets you run projects and retrieve their results programmatically.

You can grab your scraped data in Excel or JSON formats, and if you like working with tools like Google Sheets or Tableau, you can easily bring your data right into those platforms too.
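The sketch below builds the URL for a project's latest completed run and decodes a response body, showing how the REST API fits into a pipeline. The `/projects/{token}/last_ready_run/data` path and the `api_key` and `format` parameters follow ParseHub's v2 API documentation as we recall it, so verify them before use. The project token and key are placeholders. Whether responses arrive gzip-compressed is an assumption the `decode_body` helper guards against. The compressed body here is simulated.

```python
import gzip
from typing import Optional
from urllib.parse import urlencode

# Base path per ParseHub's v2 REST API docs as we understand them (verify before use).
PARSEHUB_API_BASE = "https://www.parsehub.com/api/v2"


def parsehub_data_url(project_token: str, api_key: str, fmt: str = "json") -> str:
    """URL for the data of a project's most recent completed run ('json' or 'csv')."""
    query = urlencode({"api_key": api_key, "format": fmt})
    return f"{PARSEHUB_API_BASE}/projects/{project_token}/last_ready_run/data?{query}"


def decode_body(raw: bytes, content_encoding: Optional[str]) -> str:
    """Decompress the body if the server marked it as gzip, then decode it as UTF-8."""
    if content_encoding == "gzip":
        raw = gzip.decompress(raw)
    return raw.decode("utf-8")


data_url = parsehub_data_url("YOUR_PROJECT_TOKEN", "YOUR_API_KEY", fmt="csv")

# Simulated gzip-compressed body, standing in for a real HTTP response.
simulated = gzip.compress(b"name,price\nWidget A,19.99\n")
text = decode_body(simulated, "gzip")
```

With a real response, the `Content-Encoding` header tells you whether decompression is needed. The resulting CSV text can then be imported into Google Sheets or Tableau.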

9. Import.io

Import.io is an e-commerce web data extraction platform that specializes in extracting protected, high-value web data. Whether you need hard-to-get inventory data or product ratings, it delivers precise, reliable data, which is critical for accurate consumer insights and sentiment analysis.

Import.io lets you work with a range of e-commerce data types, such as product details, reviews, product rankings, product Q&A, and availability.

It uses AI and an interaction mode to get past roadblocks such as CAPTCHAs and logins when crawling modern sites.

10. SerpApi

Known for real-time SEO data scraping, SerpApi is one of the best-known Google Search APIs.

SerpApi collects SEO data such as keyword rankings, backlinks, ads, and metadata from over 50 search engines.

SerpApi emphasizes speed, accuracy, and legal compliance, offering built-in proxy rotation, location targeting, and bulk search support.
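A SerpApi call comes down to a query string naming the search engine, the query, and optionally a location. The sketch below builds such a URL without sending it. The `search.json` endpoint and the `engine`, `q`, `location`, and `api_key` parameters follow SerpApi's public documentation as we understand it. The key, query, and location are placeholders.

```python
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

SERPAPI_ENDPOINT = "https://serpapi.com/search.json"


def serpapi_url(
    api_key: str, query: str, location: Optional[str] = None, engine: str = "google"
) -> str:
    """Build a SerpApi search URL for the given engine, query and optional location."""
    params = {"engine": engine, "q": query, "api_key": api_key}
    if location:
        params["location"] = location  # localize results, e.g. for rank tracking
    return f"{SERPAPI_ENDPOINT}?{urlencode(params)}"


search_url = serpapi_url(
    "YOUR_SERPAPI_KEY", "running shoes", location="Austin, Texas, United States"
)
# Decode the query string to confirm every parameter round-trips correctly.
params = parse_qs(urlparse(search_url).query)
```

Changing `engine` is how the same call would target another supported search engine. In the JSON response, organic listings are returned under `organic_results`.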

Top web scraping tools compared: Features, strengths, and best use cases

This comparison table summarizes the leading web scraping tools, their key features, unique strengths, and best-fit use cases. It should help you quickly identify the most suitable option based on scalability, usability, and specialization.

| Tool | Key Features | Unique Strengths / Use Cases |
|---|---|---|
| Scrapingdog | Web scraping API for many site types (search engines, social media, e-commerce). Handles proxies, CAPTCHAs, and blocks. | Ideal for price monitoring, SEO monitoring, lead generation, and product data extraction without infrastructure headaches. |
| ScraperAPI | 40M+ proxies across 50+ countries. Handles proxy rotation, CAPTCHAs, and browser automation. Scrapes asynchronously at scale. | Localized data via geotargeting, plus enterprise-grade support with an account manager and live help. |
| Octoparse | No-code scraping tool with desktop and cloud platforms, a workflow designer, and hundreds of templates. | Best for non-developers: quick setup with no coding, an intuitive visual interface, and preset templates. |
| Apify | Full-stack scraping and automation platform with 6,000+ pre-built "Actors" (bots). Supports storage, integrations, and scaling. | Go-to platform for building and publishing scrapers, automation tools, and AI agents at any scale. |
| Zyte | API for unblocking, proxy rotation, browser rendering, and CAPTCHA bypass. Compliance features aligned with GDPR and CCPA. | Best for responsible, compliance-driven data extraction. |
| Oxylabs | Strong residential and datacenter proxy services. Real-time scraping tools. | Tailored for large-scale projects and market research, with dedicated account manager support. |
| Diffbot | Uses computer vision and ML to classify pages into 20 types. Provides structured data plus a massive Knowledge Graph (nearly 10B linked datasets). | Unique in rule-free, AI-driven extraction plus an enterprise Knowledge Graph. |
| ParseHub | Handles JavaScript/AJAX sites. Easy click-based interface, REST API, exports to Excel/JSON. | Excellent for dynamic sites, with easy integration into Google Sheets and Tableau. |
| Import.io | Specializes in e-commerce scraping (products, reviews, Q&A, rankings, availability). Uses AI to get past CAPTCHAs and logins. | Focused on high-value, protected e-commerce data for consumer insights and sentiment analysis. |
| SerpApi | Google Search API for real-time SEO data (keywords, ads, backlinks, metadata). Built-in proxy rotation and location targeting. | Specialized in SEO monitoring and SERP data from 50+ search engines, with a focus on legal compliance. |

This comparison simplifies tool selection by mapping features to business needs. Choose the right scraper confidently, balancing ease of use, scalability, compliance, and budget fit.

Common drawbacks of using web scraping tools

Web scraping tools may look like an easy way to gather data, but they also pose several challenges for businesses.

Many tools struggle with difficult sites that block bots using CAPTCHAs and IP checks.

Without the right technical skills, these roadblocks are hard to overcome. Websites also change frequently, which can cause scrapers to stop working or fetch the wrong data.
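One common safeguard against silent breakage is to validate each batch before it reaches downstream systems. The sketch below flags records with missing values, so a layout change shows up as an alert rather than as bad data. The three-field schema is hypothetical and the 20% alert threshold is arbitrary.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical schema: fields every scraped product record should contain.
REQUIRED_FIELDS = ("title", "price", "url")


def find_broken_records(
    records: Sequence[Dict[str, object]],
    required: Sequence[str] = REQUIRED_FIELDS,
) -> List[Tuple[int, List[str]]]:
    """Return (index, missing_fields) for every record lacking a required value."""
    problems = []
    for index, record in enumerate(records):
        # Treat absent keys, None and empty strings as missing; 0 is a valid price.
        missing = [field for field in required if record.get(field) in (None, "")]
        if missing:
            problems.append((index, missing))
    return problems


def should_alert(
    records: Sequence[Dict[str, object]],
    required: Sequence[str] = REQUIRED_FIELDS,
    threshold: float = 0.2,
) -> bool:
    """Flag a batch when the share of broken records exceeds the threshold."""
    if not records:
        return True  # an empty batch usually means the scraper itself failed
    return len(find_broken_records(records, required)) / len(records) > threshold


batch = [
    {"title": "Widget A", "price": 19.99, "url": "https://shop.example/a"},
    {"title": "Widget B", "price": None, "url": "https://shop.example/b"},
    {"title": "Widget C", "price": 0, "url": "https://shop.example/c"},
]
problems = find_broken_records(batch)
alert = should_alert(batch)
```

Checking the share of broken records, rather than failing on the first one, tolerates the occasional odd listing while still catching a scraper that a site redesign has broken.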

Legal issues can also put your business at risk.

This is where web scraping companies come in. They handle legal checks and maintenance and make sure the data is clean.

You don't have to worry about the technical side. You simply get data that's ready to use.

Why should you hire a web scraping company?

Hiring a web scraping company offers a strategic advantage. It gives you access to specialized expertise and advanced technology. These firms navigate complex website structures, manage proxy networks, and handle anti-scraping measures, ensuring reliable and uninterrupted data extraction.

Working with an experienced web scraping service provider helps you minimize risks and maximize the value of your data initiatives.

Key advantages include:

- Custom-Built Scrapers

- Fully Managed Infrastructure

- Proactive Error Handling & Maintenance

- Higher Data Accuracy & Depth

- Legal Compliance

- Seamless Integration

- Scalable & Cost-Effective

Conclusion

Before you choose a tool or service, it is important to understand your business needs. It is equally important to assess factors such as ease of use, learning curve, costs and terms of use.

No matter what tool you use, always scrape ethically, follow the rules, and respect privacy.
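Honoring a site's robots.txt file is one concrete, widely followed way to "follow the rules". The sketch below uses Python's standard `urllib.robotparser` on a hypothetical robots.txt parsed from a string. In practice you would call `set_url()` and `read()` against the live file. The bot name and URLs are placeholders. Respecting robots.txt is a courtesy convention. It does not replace reviewing a site's terms of service or applicable privacy law.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; in practice use set_url(...) and read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask whether our (hypothetical) bot may fetch specific pages.
allowed_product = parser.can_fetch("MyScraperBot", "https://shop.example/products/42")
allowed_checkout = parser.can_fetch("MyScraperBot", "https://shop.example/checkout/cart")

# Seconds to wait between requests, if the site specifies one (None otherwise).
delay = parser.crawl_delay("MyScraperBot")
```

A scraper would skip disallowed URLs and sleep for `delay` seconds between requests to the same host.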

If you need large volumes of data, it makes sense to partner with a web scraping company. A specialist can handle complex scenarios and provide expert resources to keep your data clean, accurate, and legally compliant.

HabileData is a global provider of data management and business process outsourcing solutions, empowering enterprises with over 25 years of industry expertise. Alongside our core offerings - data processing, digitization, and document management - we’re at the forefront of AI enablement services. We support machine learning initiatives through high-quality data annotation, image labeling, and data aggregation, ensuring AI models are trained with precision and scale. From real estate and ITES to retail and Ecommerce, our content reflects real-world knowledge gained from delivering scalable, human-in-the-loop data services to clients worldwide.