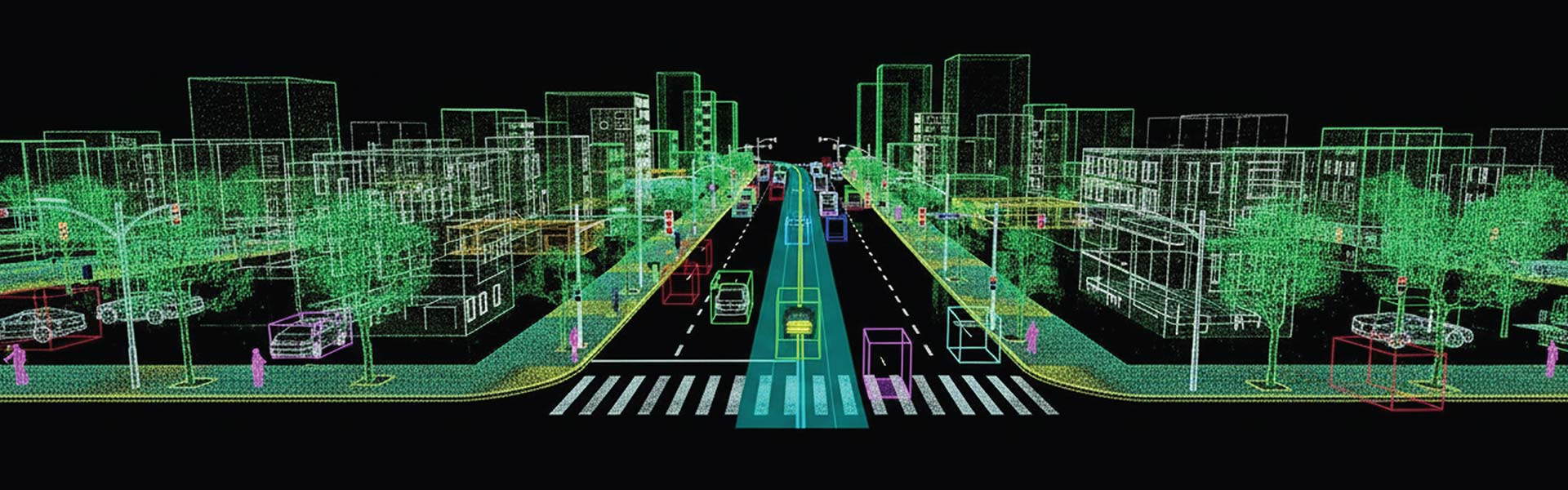

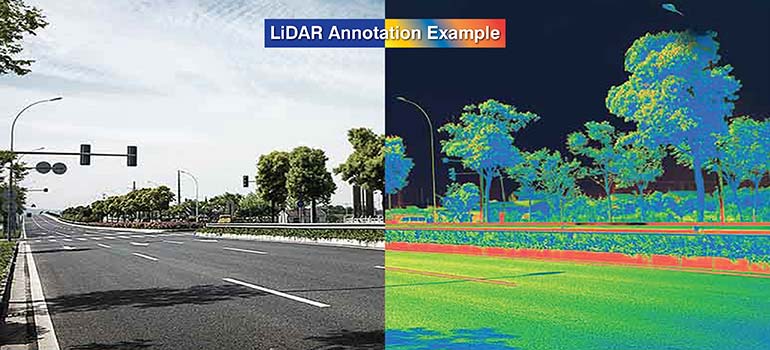

LiDAR systems generate detailed 3D point clouds that, when precisely annotated, enable AI and ML models to interpret real-world environments for autonomous vehicles, robotics, and smart infrastructure with high accuracy.

Contents

- What is LiDAR point cloud data

- Why annotate LiDAR point cloud data

- Applications of annotated LiDAR data in real-world ML models

- Why annotating LiDAR data is complex yet crucial

- How to annotate LiDAR point cloud data

- Best practices for high-quality LiDAR annotations

- Future of LiDAR annotation

- Conclusion

LiDAR (Light Detection and Ranging) systems have become essential for collecting training data for computer vision models. A LiDAR sensor sends out laser pulses and measures how long each one takes to bounce back. What we receive is a collection of spatial coordinates that maps real-world geometry in high detail. These collections are called point clouds, and once annotated they are fed into the AI and machine learning systems that run autonomous vehicles, robotics, and smart infrastructure.
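
The ranging principle is simple enough to sketch. A pulse travels to the surface and back, so the distance is the speed of light times the round-trip time, divided by two. The short Python sketch below shows that calculation and then converts a range plus the beam's horizontal (azimuth) and vertical (elevation) angles into X, Y, Z. The axis convention (X forward, Y left, Z up) and the function names are assumptions for illustration; real sensors also apply per-beam calibration that this sketch ignores.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_range(round_trip_time_s: float) -> float:
    """Distance to the reflecting surface; the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


def to_cartesian(range_m: float, azimuth_deg: float, elevation_deg: float) -> tuple[float, float, float]:
    """Convert one return (range + beam angles) into sensor-frame X, Y, Z.

    Assumed convention: X forward, Y left, Z up; azimuth measured from X toward Y,
    elevation measured upward from the X-Y plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection of the range onto the X-Y plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


r = tof_range(200e-9)                  # a 200 ns round trip
print(round(r, 3))                     # 29.979
x, y, z = to_cartesian(r, 30.0, -2.0)  # same return, beam 30 deg to the left and 2 deg down
print(round(x, 2), round(y, 2), round(z, 2))
```

Repeating this for every pulse in a sweep is what produces the point cloud that annotators later label.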

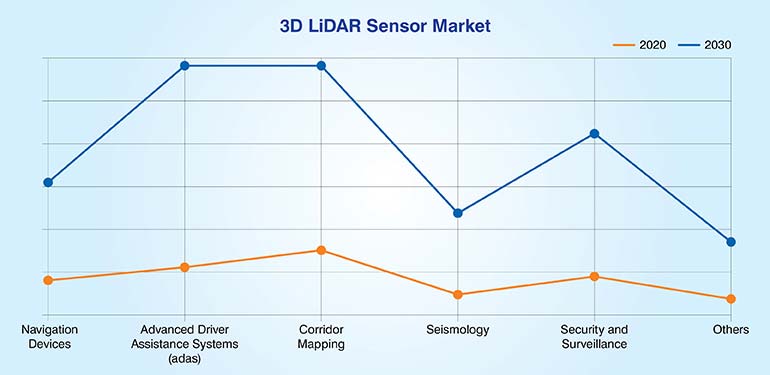

The global 3D LiDAR sensor industry generated $0.51 billion in 2020, and is anticipated to generate $2.30 billion by 2030, witnessing a CAGR of 14.5% from 2021 to 2030. Developments in industrial automation and growing demand for autonomous vehicle technology are driving this growth.

Raw point clouds, however, don’t convey much meaning to a machine on their own. They need to be annotated: labeled with 3D bounding boxes, semantic segmentation, object classes, or other annotation types that align with a project’s goals. Once the data is properly annotated, AI models can make sense of a scene and its objects and respond accordingly.

In this guide, we walk through how we annotate LiDAR point cloud data. We’ll cover the types of annotations, the tools we use, how the workflow goes, and some practical tips for building computer vision training datasets.

What is LiDAR point cloud data

LiDAR point cloud data is essentially a 3D snapshot of the physical world, captured using laser-based sensors. Each point in the cloud represents one laser reflection and carries spatial coordinates (X, Y, Z). Each point also carries metadata such as intensity, return number, and timestamp.
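
One way to picture this per-point layout is as a NumPy structured array. The field names, dtypes, and sample values below are hypothetical, loosely modelled on the attributes that formats such as LAS store for each point; annotation later adds labels on top of these fields.

```python
import numpy as np

# Hypothetical per-point layout; names and dtypes are illustrative only.
POINT_DTYPE = np.dtype([
    ("x", np.float64), ("y", np.float64), ("z", np.float64),  # metres
    ("intensity", np.uint16),     # strength of the returned pulse
    ("return_number", np.uint8),  # 1 = first return of a pulse, 2 = second, ...
    ("timestamp", np.float64),    # acquisition time in seconds
])

points = np.array([
    (12.4, -3.1, 0.8, 1830, 1, 0.000120),
    (12.5, -3.0, 1.9, 910, 2, 0.000120),  # second return of the same pulse
    (40.2, 7.7, 0.2, 220, 1, 0.000410),
], dtype=POINT_DTYPE)

xyz = np.column_stack([points["x"], points["y"], points["z"]])  # (N, 3) coordinates
first_returns = points[points["return_number"] == 1]
print(xyz.shape, len(first_returns))  # (3, 3) 2
```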

The quality of a point cloud depends heavily on the LiDAR setup, the placement and orientation of the sensors, and the characteristics of the environment. Before annotators start labeling, the data must be cleaned: outliers removed, distortions and noise corrected, and frames properly aligned and oriented.

LiDAR annotation is crucial in domains where applications depend on precise spatial awareness and object detection.

- Autonomous vehicles and advanced driver assistance systems (ADAS) depend on labeled point clouds to learn how to navigate and avoid obstacles.

- SLAM (simultaneous localization and mapping) systems use annotated point clouds to map their environments.

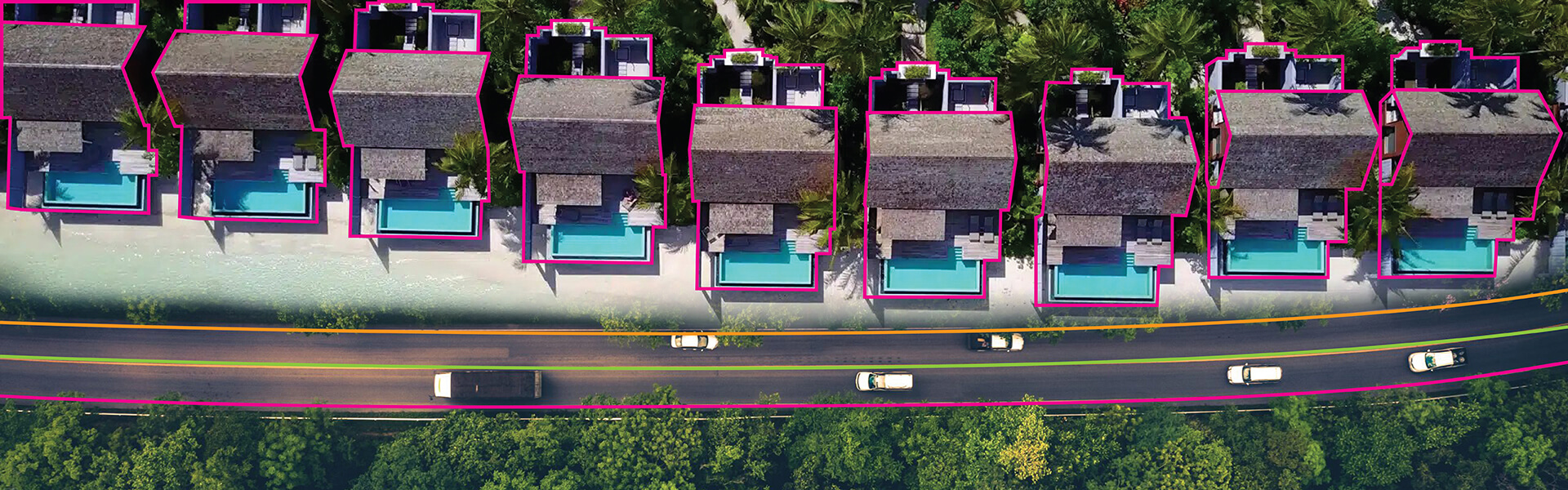

- Urban planning, infrastructure projects and automated logistics all of these rely on labeled 3D data for tracking assets, managing traffic and for understanding scenes.

Good annotations ensure that machine learning models can actually understand the raw data captured by the sensors.

Why annotate LiDAR point cloud data

If you’re going to use LiDAR in the field, your process must begin with proper annotation. Getting the labels right speeds up the development of AI systems that need to understand and interact with the 3D world. From a technical standpoint, here is why LiDAR annotation matters:

- 3D perception in autonomous systems: Autonomous navigation systems need to perceive exactly where things are in three dimensions. They must detect relevant objects, track them, and decide what to do. Models trained on well-annotated LiDAR data give autonomous systems real-time awareness and help them operate safely under changing conditions.

- Training data for object detection and segmentation models: Annotation techniques such as 3D bounding boxes and semantic segmentation produce the training data AI needs to recognize and differentiate objects. These otherwise routine operations become much harder, and more critical to get right, on complex point cloud datasets.

- Sensor fusion and multimodal learning: LiDAR is commonly combined with camera, radar, and GPS data to build a more complete understanding of a scene. Models then learn from multiple sources at once, which helps them handle varying lighting conditions and environmental variables better.

- Scene understanding and spatial mapping: For applications like HD map generation, path planning, or semantic localization, LiDAR annotations must capture road layouts, obstacles, and navigable space.

- Benchmarking and evaluation: Having consistently annotated datasets provides you with the ground truth you need for testing models. It gives you the ability to reliably compare how different algorithms and architectures perform under real-world stresses and conditions.
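
As a sketch of how ground truth is used in benchmarking, the function below computes 3D intersection-over-union (IoU) between an annotated box and a predicted box. It assumes axis-aligned boxes given as min/max corners, which is a simplification: KITTI-style evaluation scores rotated boxes, and nuScenes matches detections by centre distance rather than IoU. The function name and sample boxes are illustrative.

```python
import numpy as np


def iou_3d_axis_aligned(box_a, box_b) -> float:
    """3D IoU of two axis-aligned boxes given as (xmin, ymin, zmin, xmax, ymax, zmax)."""
    a = np.asarray(box_a, dtype=float)
    b = np.asarray(box_b, dtype=float)
    # Overlap along each axis, clipped at zero when the boxes do not intersect.
    overlap = np.clip(np.minimum(a[3:], b[3:]) - np.maximum(a[:3], b[:3]), 0.0, None)
    intersection = float(np.prod(overlap))
    union = float(np.prod(a[3:] - a[:3]) + np.prod(b[3:] - b[:3])) - intersection
    return intersection / union if union > 0 else 0.0


ground_truth = (0.0, 0.0, 0.0, 4.0, 2.0, 1.5)  # annotated box
prediction = (1.0, 0.0, 0.0, 5.0, 2.0, 1.5)    # model output shifted 1 m along X
print(round(iou_3d_axis_aligned(ground_truth, prediction), 3))  # 0.6
```

A detection typically counts as correct when its IoU with a ground-truth box exceeds a per-class threshold, which is why inconsistent box sizes in the annotations directly distort benchmark scores.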

Applications of annotated LiDAR data in real-world ML models

Annotated LiDAR data is what you need to train and validate machine learning models that operate in 3D space. Annotations turn unstructured scans into something models can interpret and use to make decisions. As AI systems grow more complex, the quality of your LiDAR annotations becomes more important, especially when safety is on the line.

Why annotating LiDAR data is complex yet crucial

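| Challenge | Explanation |
|---|---|
| Point Sparsity and Density Variations | Point density drops with distance from the sensor, so distant or small objects may be represented by only a few points. |
| Occlusions and Overlapping Objects | Objects hidden behind others return partial or no points, so annotators must infer their full extent. |
| Lack of Texture and Color Information | LiDAR captures geometry and intensity but not color, which makes some objects hard to tell apart from shape alone. |
| Complex 3D Navigation and Annotation Tools | Annotators must pan, zoom, and rotate through 3D scenes, which is slower and demands more skill than 2D labeling. |
| Annotation Time and Labor Intensity | Dense scans with many objects per frame, across long sequences, take considerable manual effort to label. |
| Human Error and Fatigue | Long sessions of detailed 3D work increase the risk of missed objects and inconsistent labels. |
| Alignment in Multi-frame and Sensor Fusion Contexts | Labels must stay consistent across frames and across sensors with different coordinate frames and timing. |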

How to annotate LiDAR point cloud data

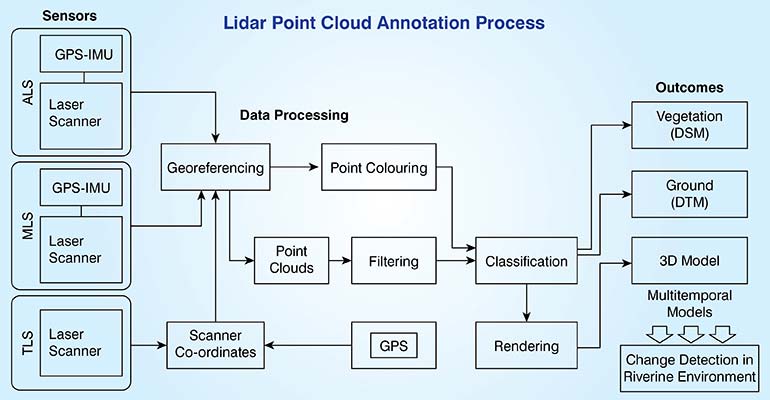

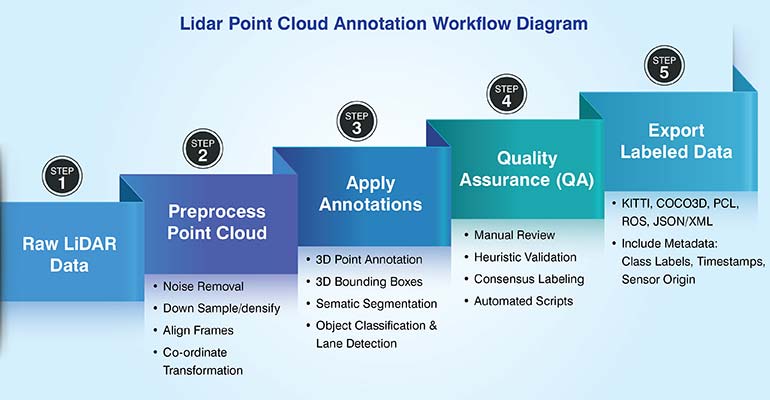

The core work in annotating LiDAR point cloud data is labeling the 3D points captured by LiDAR sensors so that target objects, surfaces, or features can be digitally reconstructed and recognized. The process begins by importing the data, usually in LAS or PCD format, into an annotation tool.
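
To show what the import step involves, here is a minimal reader for ASCII-encoded PCD files. It is a sketch under stated assumptions: it only handles `DATA ascii` with one value per field, and the function name is hypothetical. Binary PCD files and LAS files are better handled by established libraries such as Open3D or laspy.

```python
import numpy as np


def read_ascii_pcd(text: str) -> dict[str, np.ndarray]:
    """Parse ASCII PCD content into {field_name: 1-D column array}.

    Sketch only: supports `DATA ascii` with COUNT 1 for every field.
    """
    lines = text.splitlines()
    header: dict[str, list[str]] = {}
    body_start = None
    for i, raw in enumerate(lines):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments such as "# .PCD v0.7"
        key, *values = line.split()
        header[key] = values
        if key == "DATA":  # the header ends with the DATA line
            body_start = i + 1
            break
    if body_start is None or header["DATA"] != ["ascii"]:
        raise ValueError("expected an ASCII PCD file with a 'DATA ascii' line")
    if any(c != "1" for c in header.get("COUNT", [])):
        raise ValueError("multi-value fields (COUNT > 1) are not handled in this sketch")

    fields = header["FIELDS"]
    n_points = int(header["POINTS"][0])
    rows = [[float(v) for v in row.split()] for row in lines[body_start:] if row.strip()]
    data = np.array(rows[:n_points], dtype=float).reshape(n_points, len(fields))
    return {name: data[:, j] for j, name in enumerate(fields)}


SAMPLE = """# .PCD v0.7 - Point Cloud Data file format
VERSION 0.7
FIELDS x y z intensity
SIZE 4 4 4 4
TYPE F F F F
COUNT 1 1 1 1
WIDTH 2
HEIGHT 1
VIEWPOINT 0 0 0 1 0 0 0
POINTS 2
DATA ascii
1.0 2.0 0.5 120
3.5 -1.0 0.2 87
"""

cloud = read_ascii_pcd(SAMPLE)
print(cloud["x"], cloud["intensity"])
```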

Depending on the project, annotators may use 3D bounding boxes, semantic segmentation, or polylines to define target objects such as vehicles, pedestrians, roads, buildings, trees, or any other element in a scene that needs to be recorded and recognized. Before the annotated data is delivered, it passes through a series of quality control steps, including automated validation and multiple reviews, to ensure the high accuracy machine learning models need.

Clear annotation guidelines and consistent labeling are what make this work. Here’s roughly how the LiDAR annotation process goes:

1. Preprocess the raw LiDAR data

Before you annotate anything, you need to preprocess the point clouds. Annotators clean up noise, align the frames, and transform coordinates into a standard reference frame. This prepares the dataset for annotation and improves overall data quality and consistency.

- Remove noise: Filter out stray reflections and outliers, the spurious points produced when laser beams bounce off reflective surfaces or particles in the air. Removing this noise leaves the actual spatial data you need.

- Downsample or densify: Point density is never uniform across scans. Sometimes you have to downsample to process large datasets; at other times annotators need to densify sparse areas to get the detail required for accurate labeling.

- Align frames: When you’re working with sequences of LiDAR frames, synchronizing the timing between frames is essential. Proper synchronization keeps object tracking consistent and maintains spatial continuity across all annotations.

- Coordinate transformation: Point clouds arrive in sensor-based coordinates. Annotators have to transform (register) them into a global or map-based reference frame. This step is crucial because most real-world projects combine data from different sources and work with geospatial maps.
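
The noise removal, downsampling, and coordinate transformation steps can be sketched with NumPy alone. The outlier filter follows the common statistical approach (drop points whose average distance to their k nearest neighbours is far above the cloud-wide mean), similar in spirit to Open3D's `remove_statistical_outlier`; the voxel grid filter replaces the points in each cube with their centroid. Function names, defaults, the sensor pose, and the brute-force neighbour search are assumptions chosen to keep the example dependency-free, so it only suits small clouds.

```python
import numpy as np


def remove_statistical_outliers(xyz: np.ndarray, k: int = 8, std_ratio: float = 2.0) -> np.ndarray:
    """Drop points whose mean distance to their k nearest neighbours is unusually large."""
    # Brute-force pairwise distances: O(N^2) memory, fine for a small demo cloud only.
    dists = np.linalg.norm(xyz[:, None, :] - xyz[None, :, :], axis=-1)
    dists.sort(axis=1)
    mean_knn = dists[:, 1:k + 1].mean(axis=1)  # column 0 is each point's distance to itself
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    return xyz[mean_knn <= threshold]


def voxel_downsample(xyz: np.ndarray, voxel_size: float) -> np.ndarray:
    """Replace all points that fall into the same cubic voxel with their centroid."""
    keys = np.floor(xyz / voxel_size).astype(np.int64)
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)  # flatten defensively; the shape differs across NumPy versions
    sums = np.zeros((len(counts), xyz.shape[1]))
    np.add.at(sums, inverse, xyz)  # accumulate each point into its voxel's row
    return sums / counts[:, None]


def transform_points(xyz: np.ndarray, sensor_to_world: np.ndarray) -> np.ndarray:
    """Apply a 4x4 homogeneous rigid transform (rotation + translation) to (N, 3) points."""
    homogeneous = np.hstack([xyz, np.ones((len(xyz), 1))])
    return (homogeneous @ sensor_to_world.T)[:, :3]


rng = np.random.default_rng(0)
cloud = np.vstack([rng.normal(scale=0.5, size=(200, 3)),  # a compact cluster of returns
                   [[25.0, 25.0, 25.0]]])                 # one stray reflection
clean = remove_statistical_outliers(cloud)
sparse = voxel_downsample(clean, voxel_size=0.5)

# Hypothetical sensor pose: rotated 90 degrees about Z, mounted 1.8 m above the map origin.
pose = np.array([[0.0, -1.0, 0.0, 0.0],
                 [1.0,  0.0, 0.0, 0.0],
                 [0.0,  0.0, 1.0, 1.8],
                 [0.0,  0.0, 0.0, 1.0]])
world = transform_points(sparse, pose)
print(len(cloud), len(clean), len(sparse))
```

For production-scale scans, a KD-tree (for example `scipy.spatial.cKDTree`) should replace the O(N²) distance matrix, and the sensor pose would come from calibration and localization rather than a hand-written matrix.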

2. Choose the right annotation tool

Choosing the right annotation tool affects how accurate, efficient and scalable your work will be across complex 3D datasets. Look for tools that handle visualization well, give you flexibility in how you label things, let you navigate through time-sequenced data and integrate different sensor inputs for a complete workflow.

- 3D point cloud visualization (pan, zoom, rotate): The annotation tool must allow you to see the point cloud clearly so you can move around it, check object geometry from different angles and verify your annotations are spatially correct.

- Semantic labels, 3D bounding boxes and segmentation: A good tool should handle all the different annotation types, including semantic labeling, instance segmentation, and 3D bounding boxes, so annotators can capture both object-level and scene-level information.

- Multi-frame navigation for temporal consistency: The tool should let annotators move smoothly through sequential LiDAR frames. Without this ability, it is difficult to keep object tracks accurate and temporally coherent in real-world environments.

- Frame stacking or sensor fusion (LiDAR + camera): Stacking consecutive frames and overlaying synchronized RGB images or radar data adds context to the 3D annotations and improves depth perception and classification accuracy.
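
Sensor fusion in an annotation tool usually starts by projecting LiDAR points into the camera image. The sketch below uses a pinhole camera model and assumes the LiDAR-to-camera extrinsic transform and the camera intrinsic matrix are already known from calibration; lens distortion is ignored, and the camera frame follows the common convention of Z forward, X right, Y down. The function name, calibration values, and points are illustrative.

```python
import numpy as np


def project_to_image(xyz_lidar, lidar_to_camera, camera_matrix, image_size, min_depth=0.1):
    """Project (N, 3) LiDAR points into pixel coordinates with a pinhole model.

    Returns (uv, idx): pixel coordinates of the points that land inside the image,
    and their indices in the input array. Lens distortion is ignored.
    """
    homogeneous = np.hstack([xyz_lidar, np.ones((len(xyz_lidar), 1))])
    xyz_cam = (homogeneous @ lidar_to_camera.T)[:, :3]  # LiDAR frame -> camera frame
    idx = np.flatnonzero(xyz_cam[:, 2] > min_depth)     # keep points in front of the lens
    uvw = xyz_cam[idx] @ camera_matrix.T                # apply intrinsics K
    uv = uvw[:, :2] / uvw[:, 2:3]                       # perspective divide
    width, height = image_size
    inside = (uv[:, 0] >= 0) & (uv[:, 0] < width) & (uv[:, 1] >= 0) & (uv[:, 1] < height)
    return uv[inside], idx[inside]


# Hypothetical calibration: camera at the LiDAR origin, axes remapped from
# LiDAR (X forward, Y left, Z up) to camera (Z forward, X right, Y down).
lidar_to_camera = np.array([[0.0, -1.0,  0.0, 0.0],
                            [0.0,  0.0, -1.0, 0.0],
                            [1.0,  0.0,  0.0, 0.0],
                            [0.0,  0.0,  0.0, 1.0]])
K = np.array([[500.0,   0.0, 320.0],
              [  0.0, 500.0, 240.0],
              [  0.0,   0.0,   1.0]])
points = np.array([[10.0,   0.0, 0.0],   # straight ahead
                   [10.0,   2.0, 1.0],   # ahead, to the left and above
                   [-5.0,   0.0, 0.0],   # behind the camera
                   [10.0, -20.0, 0.0]])  # far to the right, outside the image
uv, idx = project_to_image(points, lidar_to_camera, K, image_size=(640, 480))
print(idx, uv)
```

The returned pixel coordinates can be used to color each point from the RGB image or to check that a 3D label lines up with the object in the matching camera frame.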

Before starting annotation, selecting the right platform is essential. Popular tools include the following industry-preferred solutions:

- Labelbox 3D

- SuperAnnotate

- Scale AI

- Label Fusion (Open Source)

- 3D Slicer (Open Source)

Choosing the right annotation tool supports consistent accuracy, efficient workflows, and the reliable training data that high-performance AI perception models depend on.

3. Select the appropriate annotation type

Selecting the appropriate annotation type for each task is crucial for labeling LiDAR point clouds accurately. For example, 3D bounding boxes suit object detection, per-point semantic segmentation suits scene understanding, and polylines suit linear features such as lane markings and road edges. Matching the method to the task improves data quality and model training accuracy across diverse LiDAR use cases.
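
To make the two most common types concrete, the sketch below represents a 3D bounding box by its center, size, and heading (yaw), a parameterization widely used in driving datasets, and then derives per-point semantic labels from the boxes. The `Box3D` class, the class-ID mapping, and the sample points are hypothetical. In practice, classes such as road or vegetation cannot be boxed and are labeled point by point.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Box3D:
    """Hypothetical 3D bounding-box label: center, size and heading (yaw about the vertical axis)."""
    label: str
    center: tuple[float, float, float]  # x, y, z in metres
    size: tuple[float, float, float]    # length (along the heading), width, height in metres
    yaw: float                          # heading in radians, counter-clockwise from the X axis

    def contains(self, xyz: np.ndarray) -> np.ndarray:
        """Boolean mask of the (N, 3) points that lie inside this box."""
        local = xyz - np.asarray(self.center)
        c, s = np.cos(-self.yaw), np.sin(-self.yaw)
        # Rotate by -yaw so the box becomes axis-aligned in its own frame.
        x_box = c * local[:, 0] - s * local[:, 1]
        y_box = s * local[:, 0] + c * local[:, 1]
        half = np.asarray(self.size) / 2.0
        return ((np.abs(x_box) <= half[0]) & (np.abs(y_box) <= half[1])
                & (np.abs(local[:, 2]) <= half[2]))


CLASS_IDS = {"unlabeled": 0, "car": 1, "pedestrian": 2}  # hypothetical taxonomy

points = np.array([[10.0, 0.0, 0.5],
                   [10.0, 1.8, 0.5],
                   [3.0, -2.0, 0.9],
                   [30.0, 5.0, 0.0]])
boxes = [Box3D("car", (10.0, 0.5, 0.75), (4.5, 1.9, 1.5), np.pi / 2),  # car heading along +Y
         Box3D("pedestrian", (3.0, -2.0, 0.9), (0.6, 0.6, 1.8), 0.0)]

# Semantic segmentation: one class ID per point, here derived from the boxes.
point_labels = np.full(len(points), CLASS_IDS["unlabeled"], dtype=np.int32)
for box in boxes:
    point_labels[box.contains(points)] = CLASS_IDS[box.label]
print(point_labels)  # [1 1 2 0]
```

The second point falls inside the car box only because of its heading; with `yaw=0.0` the same box would exclude it, which is why an accurate yaw matters as much as an accurate center and size.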

Best practices for high-quality LiDAR annotations

Production-grade autonomous driving and robotics require extremely high-fidelity perception of 3D space. That perception, and therefore the AI model’s performance, fundamentally depends on the quality of the LiDAR point cloud annotations. To achieve that quality and dataset precision, we follow a set of best practices and rules of thumb that help deep learning models perform to their full potential. Let’s look at the top 6 best practices for accurate LiDAR annotation.

- Use unambiguous annotation guidelines and taxonomy: Always standardize object definitions, attributes, and classification rules before annotation starts. A clear taxonomy keeps labeling consistent across all regions of complex point clouds.

- Use hybrid teams (technical annotators + domain experts): Ensure technical annotators have access to certified domain experts (e.g. automotive engineers). This makes sure that labeling decisions are valid both technically and contextually.

- Leverage 2D image alignment to improve annotation accuracy: Project 2D RGB pixels onto the 3D point cloud data to improve boundary accuracy and far-field object classification.

- Prioritize active QA loops and real-time feedback: Implement a tiered real-time auditing system with embedded QA checks to speed up corrective action, minimize annotation drift and ensure SLA compliance.

- Retrain pre-labeling models with human-corrected data: Feed human-corrected, gold-standard data back into your AI pre-labeling models. This iterative process fine-tunes the AI assistance and boosts throughput and dataset consistency.

- Hire LiDAR point cloud annotation experts: Specialized LiDAR annotators bring spatial reasoning, annotation skills, and tool expertise. They know how to handle complex occlusions and can accurately track low-visibility, far-field objects.
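
The automated side of a QA loop can be as simple as rule checks run on every submitted frame before human review. The sketch below checks labels against a taxonomy, per-class size limits, and a minimum point count; all thresholds and the `BoxLabel` structure are hypothetical placeholders for values a real project would take from its annotation guidelines. Flagged objects go to a reviewer rather than being rejected outright, since a far-field object can legitimately contain only a few points.

```python
from dataclasses import dataclass

# Hypothetical guideline values; a real project takes these from its annotation spec.
TAXONOMY = {"car", "truck", "pedestrian", "cyclist"}
MIN_POINTS_IN_BOX = 5
MAX_DIMENSIONS = {  # (length, width, height) upper bounds in metres
    "car": (6.0, 2.5, 2.5),
    "truck": (20.0, 3.0, 4.5),
    "pedestrian": (1.5, 1.5, 2.5),
    "cyclist": (2.5, 1.5, 2.5),
}


@dataclass
class BoxLabel:
    object_id: str
    label: str
    size: tuple[float, float, float]  # length, width, height in metres
    num_points: int                   # LiDAR points inside the box


def validate(labels: list[BoxLabel]) -> list[str]:
    """Return human-readable issues for a reviewer; an empty list means all checks passed."""
    issues = []
    for box in labels:
        if box.label not in TAXONOMY:
            issues.append(f"{box.object_id}: unknown class '{box.label}'")
            continue  # size limits are defined per class, so skip the remaining checks
        if any(d <= 0 for d in box.size):
            issues.append(f"{box.object_id}: non-positive dimension {box.size}")
        elif any(d > limit for d, limit in zip(box.size, MAX_DIMENSIONS[box.label])):
            issues.append(f"{box.object_id}: {box.label} size {box.size} exceeds guideline limits")
        if box.num_points < MIN_POINTS_IN_BOX:
            issues.append(f"{box.object_id}: only {box.num_points} points inside box")
    return issues


frame = [
    BoxLabel("obj-1", "car", (4.5, 1.9, 1.5), 240),
    BoxLabel("obj-2", "pedestrain", (0.6, 0.6, 1.8), 35),  # typo in class name
    BoxLabel("obj-3", "car", (12.0, 1.9, 1.5), 3),         # oversized and nearly empty
]
for issue in validate(frame):
    print(issue)
```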

These 6 practices will help you turn raw point cloud data into well-annotated training datasets. With this level of annotation accuracy, your deep learning models can gain the 3D perception they need to work in the field.

Future of LiDAR annotation

LiDAR annotation is evolving fast, driven by automation, multimodal data integration, and the need for scalable, high-accuracy training datasets.

- AI-Assisted Annotation Tools: AI-driven platforms now use pre-trained deep learning models for auto-labeling and active learning. These tools speed up annotation cycles, suggest probable labels, and make human-in-the-loop work more efficient while maintaining accuracy. Over time, continuous retraining helps the system adapt to new object classes and environmental changes.

- Sensor Fusion in Annotation: Combining LiDAR with RGB, radar, or thermal imagery adds context and improves depth accuracy. Fusion-based annotation provides richer 3D perception, better occlusion handling, and more refined object boundaries, making it essential for AV, robotics, and defense applications.

- Standardization & Benchmarks: The label formats of public datasets such as KITTI, nuScenes, and the Waymo Open Dataset are becoming de facto industry standards. These benchmarks enable interoperability, model evaluation, and dataset sharing across the AI ecosystem.

- Synthetic Data: Synthetic point clouds from simulations can augment real-world datasets. They improve model robustness in rare or unsafe conditions and reduce manual annotation requirements.
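
Active learning is one way AI-assisted tools decide where human effort is best spent. A minimal sketch, assuming the pre-labeling model outputs class probabilities for each detection: score every frame by the mean entropy of its detections and send the most uncertain frames to annotators first. The input format and function names are assumptions, and entropy-based uncertainty sampling is only one of several selection strategies.

```python
import numpy as np


def entropy(probs: np.ndarray) -> np.ndarray:
    """Shannon entropy of each row of class probabilities (higher means less certain)."""
    p = np.clip(probs, 1e-12, 1.0)  # avoid log(0)
    return -(p * np.log(p)).sum(axis=-1)


def select_for_review(frame_ids, detection_probs, budget):
    """Return the `budget` frame IDs whose pre-labels are most uncertain.

    detection_probs holds one (num_detections, num_classes) array per frame,
    e.g. softmax scores from a pre-labeling model (assumed input format).
    """
    # Mean detection entropy per frame; frames without detections score 0 here,
    # although they may hide missed objects and deserve a separate check.
    scores = np.array([entropy(p).mean() if len(p) else 0.0 for p in detection_probs])
    most_uncertain_first = np.argsort(-scores)
    return [frame_ids[i] for i in most_uncertain_first[:budget]]


probs = [
    np.array([[0.98, 0.01, 0.01]]),                      # one confident detection
    np.array([[0.40, 0.35, 0.25], [0.90, 0.05, 0.05]]),  # one ambiguous, one confident
    np.array([[0.34, 0.33, 0.33]]),                      # near-uniform: very unsure
]
print(select_for_review(["f1", "f2", "f3"], probs, budget=2))  # ['f3', 'f2']
```

Once annotators correct the selected frames, the corrected labels can be fed back into the pre-labeling model, which closes the retraining loop described above.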

As LiDAR annotation matures, its future lies in hybrid pipelines that merge automation, sensor fusion, and standardized workflows to deliver richer, faster, and more reliable 3D datasets.

Conclusion

Accurate 3D annotation remains the backbone of reliable AI and ML model performance, directly influencing perception accuracy, scene understanding, and decision-making in real-world systems. As LiDAR-based applications expand across autonomous driving, robotics, and geospatial intelligence, the precision and consistency of annotations determine model robustness and generalizability.


Snehal Joshi heads the business process management vertical at HabileData, the company offering quality data processing services to companies worldwide. He has successfully built, deployed and managed more than 40 data processing management, research and analysis and image intelligence solutions in the last 20 years. Snehal leverages innovation, smart tooling and digitalization across functions and domains to empower organizations to unlock the potential of their business data.