Retraining AI models to fit real-world business workflows is a difficult proposition in industries such as ecommerce, finance, customer support, and healthcare. Prompt engineering helps teams get the outputs they want through prompt tuning and prompt optimization, making AI responses more focused and reliable.

Contents

- Understanding prompt engineering

- Different types of prompts: Key examples and applications

- The business value of prompt engineering for AI/ML companies

- 5 best practices and tips for effective prompt engineering

- Top 5 prompt engineering tools and frameworks

- Common prompt engineering pitfalls and how to avoid them

- Real-world examples of prompt engineering in action

- Future of prompt engineering

- Conclusion

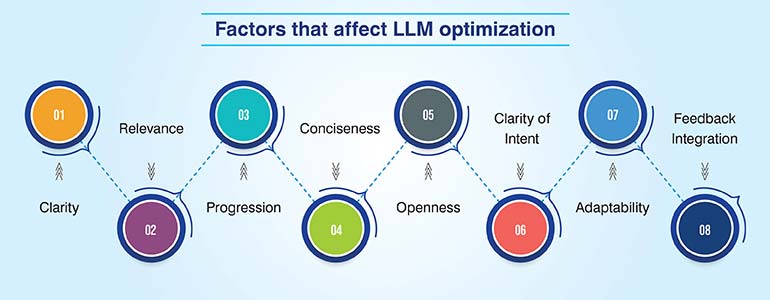

Prompt engineering rests on a simple truth: the accuracy of AI responses depends largely on how you frame your queries to the model, and every AI model has its own quirks. Optimized prompts directly enhance model accuracy by guiding LLMs toward contextually relevant, factual responses. They minimize the hallucinations that derail AI reliability and ensure consistent output quality. As a result, businesses achieve faster task automation, streamlined workflows, and improved productivity.

However, according to McKinsey's 2024 AI report, over 80% of generative AI use cases fail to deliver the expected ROI because of poorly designed prompts and unclear objectives. In such cases, retraining the models is often not feasible. Revising and restructuring the prompts to yield optimized results is the smarter move.

In this guide, we explain core prompt engineering principles, tools, and techniques, with practical examples that save time in summarization, data extraction, analysis, and prompt tuning.

Understanding prompt engineering

Let’s look at what prompt engineering is, why it matters for AI/ML companies, and how it enhances model reliability, response quality, and seamless adaptation.

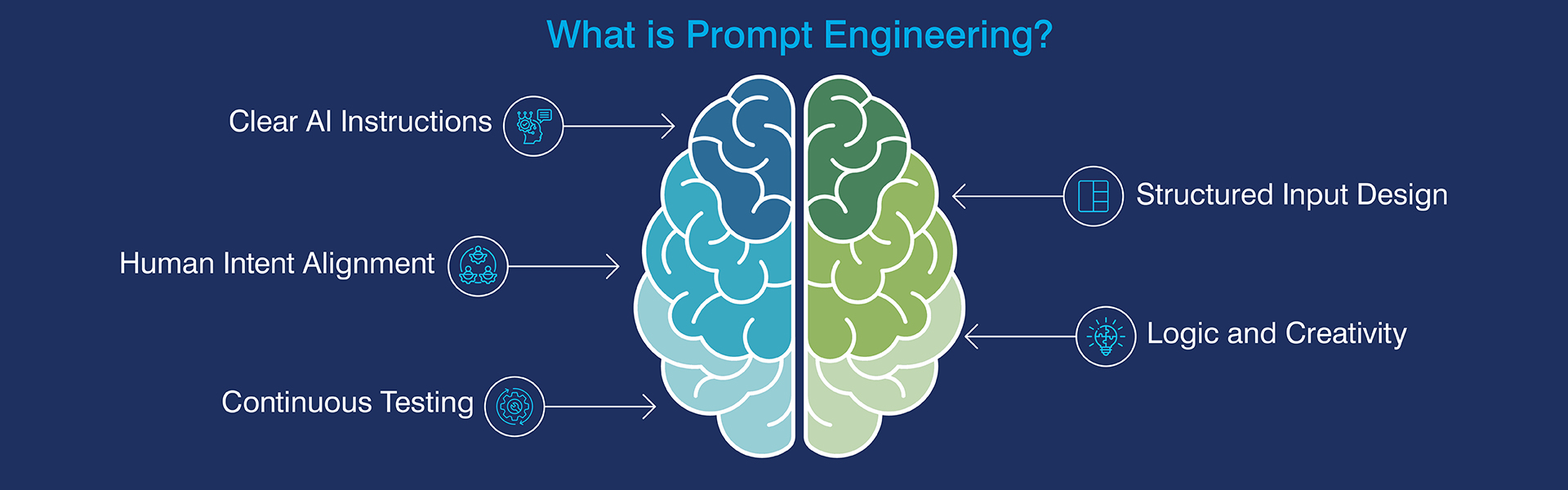

What is prompt engineering

Prompt engineering is the process of designing and refining inputs (prompts) to guide large language models (LLMs) like GPT toward accurate, relevant, and context-aware outputs. It involves structuring instructions, context, and constraints so that the model interprets intent correctly, which improves reliability, response quality, and alignment with specific business or technical goals.

Ask for what you want, simply. Need a short answer? Say “Give a one-sentence answer.” Need JSON? List the fields and the format. Better prompts lead to smoother workflows and fewer do-overs.
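As a minimal sketch of this advice, assuming a JSON reply is wanted, the Python below builds a prompt that names every expected field and then checks a reply against those fields. The function names, field names, and the sample reply are hypothetical, and no specific model API is called.

```python
import json
from typing import Optional

def build_json_prompt(task: str, fields: dict[str, str]) -> str:
    """State the task, list every expected JSON field, and forbid extra text."""
    field_lines = "\n".join(f'- "{name}": {desc}' for name, desc in fields.items())
    return (
        f"{task}\n"
        "Respond with a single JSON object containing exactly these fields:\n"
        f"{field_lines}\n"
        "Do not include any text outside the JSON object."
    )

def parse_json_reply(reply: str, fields: dict[str, str]) -> Optional[dict]:
    """Return the parsed object if it is JSON with exactly the requested fields, else None."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or set(data) != set(fields):
        return None
    return data

# Hypothetical fields for a review-classification task.
FIELDS = {
    "sentiment": "one of positive, neutral, negative",
    "summary": "a one-sentence summary",
}
prompt = build_json_prompt("Classify the customer review below.", FIELDS)
print(prompt)
```

A reply that fails `parse_json_reply` is a signal to retry or tighten the prompt rather than to patch the output by hand.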

Why prompt engineering matters for AI/ML companies

Prompt engineering is crucial for AI and ML companies because raw LLM outputs without structured prompts often lead to inconsistent, biased, or irrelevant results. Without precise guidance, models waste compute resources, generate hallucinations, and amplify bias, hurting the performance and reliability of the model.

Structured prompt engineering resolves these challenges by improving model understanding, reducing errors, and enabling faster fine-tuning cycles. AI and ML companies benefit from improved ROI on AI initiatives and more dependable outputs for mission-critical workflows.

For instance, in autonomous driving, accurate prompts help models interpret sensor data safely; in ecommerce, they ensure precise product categorization and personalized recommendations.

Overall, prompt engineering transforms AI from unpredictable experimentation into a consistent, scalable, and business-aligned decision-making tool.

Different types of prompts: Key examples and applications

Explore the various types of prompts used in prompt engineering, each designed to improve AI understanding, accuracy, and context handling for domain-specific, reliable, and explainable model outputs.

Common prompt types and example use cases

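| Prompt Type | Description | Use Case / Example |
|---|---|---|
| Instructional Prompts | Give the model a direct, explicit instruction, including the desired format or length. | “Give a one-sentence answer.” |
| Zero-Shot Prompts | Ask the model to perform a task without providing any examples. | Generalized tasks such as summarizing a short document. |
| Few-Shot Prompts | Include a few input-output examples that demonstrate the expected pattern. | Grounding the model in domain-specific patterns, such as a fixed classification format. |
| Chain-of-Thought Prompts | Ask the model to reason step by step before giving its final answer. | Analytical tasks and structured problem-solving. |
| Role-Based Prompts | Assign the model a persona or role that frames its tone and reasoning scope. | “You are a financial analyst.” |
| Contextual Prompts | Supply background information, metadata, or a schema the model should rely on. | Grounding answers in product data or reference documents. |
| Multimodal Prompts | Combine text with other inputs such as images or audio. | Asking a question about an uploaded image. |
| Goal-Oriented Prompts | Define a clear objective or outcome to focus model responses. | Generate a marketing email that increases click-through rates. |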

A clear understanding of different prompt types empowers your AI/ML teams to craft precise, context-aware instructions. It also enhances model accuracy, reliability, and domain-specific performance across diverse business applications.
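To make the table concrete, here is a small Python sketch that layers several of these prompt types (role-based, contextual, goal-oriented, instructional, and a chain-of-thought cue) into one prompt string. The `compose_prompt` helper and its wording are illustrative assumptions, not a standard template.

```python
from typing import Optional

def compose_prompt(instruction: str, role: Optional[str] = None,
                   context: Optional[str] = None, goal: Optional[str] = None,
                   step_by_step: bool = False) -> str:
    """Assemble a prompt from optional parts that mirror the prompt types in the table."""
    parts = []
    if role:                        # role-based
        parts.append(f"You are {role}.")
    if context:                     # contextual
        parts.append(f"Context:\n{context}")
    if goal:                        # goal-oriented
        parts.append(f"Goal: {goal}")
    parts.append(instruction)       # instructional core; on its own this is a zero-shot prompt
    if step_by_step:                # chain-of-thought cue
        parts.append("Think through the problem step by step before giving your final answer.")
    return "\n\n".join(parts)

zero_shot = compose_prompt("Summarize the following paragraph in two sentences.")
layered = compose_prompt(
    "Draft the email body.",
    role="a B2B email marketer",
    goal="increase click-through rates",
)
print(layered)
```

Keeping each part optional makes it easy to test which layers actually improve results for a given task.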

Poor prompt vs. optimized prompt

The shift from vague instructions to refined, data-driven prompts delivers immediate, measurable value. In fact, research shows that structured, optimized prompts can reduce API costs by up to 76% while maintaining output quality, a critical factor for enterprise-scale AI deployment. The table below compares poor and optimized prompts across key performance aspects.

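| Aspect | Poor Prompt | Optimized Prompt |
|---|---|---|
| Output Accuracy | Vague instructions leave room for guesses and hallucinations. | Clear instructions and grounding context steer the model toward factual answers. |
| Response Relevance | Generic or off-topic responses. | Responses aligned with the user’s intent and task scope. |
| Model Efficiency | Repeated retries and long, unfocused outputs waste tokens and compute. | Fewer retries and concise outputs lower token usage. |
| Domain Adaptation | Generic language that misses industry terminology. | Domain terms and operational context embedded in the instructions. |
| Business Impact | More rework and manual validation. | Lower validation costs and faster decision cycles. |
| User Experience | Inconsistent tone and format across responses. | Consistent, predictable outputs in the expected format. |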
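The cost side of this comparison is simple arithmetic over token counts. The sketch below assumes hypothetical per-million-token prices and token counts, chosen only for illustration; it does not reproduce the 76% figure cited above, and real prices vary by provider and model.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Cost in USD of one request, given prices per million input and output tokens."""
    return (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000

def percent_saving(before: float, after: float) -> float:
    """Relative cost reduction, in percent."""
    return 100.0 * (before - after) / before

# Hypothetical prices (USD per million tokens), for illustration only.
PRICE_IN, PRICE_OUT = 1.00, 4.00

# Vague prompt: padded context and a rambling answer.
poor = request_cost(1_200, 800, PRICE_IN, PRICE_OUT)
# Focused prompt: trimmed context and a format-constrained answer.
optimized = request_cost(600, 150, PRICE_IN, PRICE_OUT)

print(f"poor=${poor:.4f} optimized=${optimized:.4f} "
      f"saving={percent_saving(poor, optimized):.1f}%")
```

Because output tokens are often priced higher than input tokens, constraining the answer format tends to matter as much as trimming the prompt itself.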

The business value of prompt engineering for AI/ML companies

Explore how prompt engineering delivers measurable business value by reducing model training costs, accelerating AI deployment, ensuring domain adaptation, and generating quantifiable ROI across enterprise-scale AI initiatives.

1. Reduced model training costs

Optimized prompts enable AI models to deliver accurate outputs without extensive retraining or fine-tuning. By aligning prompts with domain-specific instructions, companies reduce compute cycles, storage needs, and annotation costs. This precision-driven approach maximizes model efficiency, enabling scalable experimentation with minimal infrastructure investment and faster model iteration at lower expense.

2. Faster AI deployment

Structured prompts accelerate AI system rollout by minimizing manual configuration and model calibration. They shorten time-to-value through reliable, context-aware outputs from pre-trained LLMs. Teams can quickly integrate optimized prompts into production pipelines, reducing development overhead, improving deployment velocity, and ensuring consistent performance across applications and operational environments.

3. Consistent domain adaptation

Prompt engineering enhances domain adaptation by embedding industry-specific terminology and operational context directly into model instructions. This controlled conditioning ensures consistent responses aligned with regulatory, linguistic, or procedural nuances of each domain. Enterprises achieve higher model reliability and easier cross-domain scaling without repeated fine-tuning or separate model versions.
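One way to embed domain terminology and procedural rules is sketched below in Python, under the assumption that a plain-text prompt prefix is enough for the model at hand. The `with_domain_context` helper, the insurance glossary, and the rules are hypothetical examples.

```python
def with_domain_context(instruction: str, domain: str,
                        glossary: dict[str, str], rules: list[str]) -> str:
    """Prefix a task with domain terminology and procedural rules."""
    terms = "\n".join(f"- {term}: {meaning}" for term, meaning in sorted(glossary.items()))
    constraints = "\n".join(f"- {rule}" for rule in rules)
    return (
        f"Domain: {domain}\n"
        f"Use these terms exactly as defined:\n{terms}\n"
        f"Follow these rules:\n{constraints}\n\n"
        f"Task: {instruction}"
    )

prompt = with_domain_context(
    "Explain the claim status to the policyholder.",
    domain="property insurance",
    glossary={
        "deductible": "amount the policyholder pays before coverage applies",
        "adjuster": "person who assesses the claim",
    },
    rules=["Do not promise a payout amount.", "Use plain language."],
)
print(prompt)
```

Swapping the glossary and rules per domain is what lets one base model serve several domains without separate fine-tuned versions.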

4. Quantifiable ROI

Well-engineered prompts produce consistent, measurable outcomes that directly impact ROI. They reduce resource wastage, optimize output accuracy, and lower human validation costs. Organizations gain tangible financial value through faster decision cycles, improved automation accuracy, and minimized post-processing, all translating to sustained operational efficiency and competitive advantage in AI-driven environments.

Strategic prompt engineering transforms AI into a measurable business asset, cutting costs, accelerating deployment, improving adaptability, and delivering clear, data-backed returns on AI investments.

5 best practices and tips for effective prompt engineering

Effective prompt engineering relies on structured strategies that improve precision, control, and interpretability. These proven techniques optimize model performance and ensure consistency across enterprise-scale AI implementations.

1. Define roles and context

Assigning a role in the prompt, such as “You are a financial analyst,” provides the model with clear context and task framing. This technique aligns responses with expected domain tone and reasoning scope. Role-based prompts minimize ambiguity, guide model interpretation, and enable predictable behavior across specialized applications, enhancing output consistency and domain accuracy.
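Many chat-style LLM APIs accept a list of messages with `role` and `content` keys, where a `system` message carries the role definition. The sketch below builds such a list; the persona wording and the `role_messages` helper are assumptions for illustration, and the actual API call is left out.

```python
def role_messages(persona: str, scope: str, user_query: str) -> list[dict[str, str]]:
    """Build a chat-style message list with the role defined in a system message."""
    system = (
        f"You are {persona}. Answer only within the scope of {scope}. "
        "If a question falls outside that scope, say so instead of guessing."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_query},
    ]

messages = role_messages(
    "a financial analyst",
    "corporate financial statements",
    "What does a falling current ratio suggest?",
)
for message in messages:
    print(message["role"], "->", message["content"])
```

Keeping the role in the system message, rather than mixing it into each user turn, keeps the framing stable across a conversation.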

2. Use chain-of-thought reasoning

Encouraging step-by-step reasoning through chain-of-thought prompting improves logical coherence and interpretability. It helps models handle complex queries by breaking them into sequential reasoning steps. This method supports analytical tasks and structured problem-solving, and because the intermediate steps are visible, they can be checked against factual data, leading to traceable and verifiable outputs in high-stakes applications.
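A minimal chain-of-thought sketch: the prompt asks for numbered steps followed by a marked final answer, and a parser separates the two so the steps can be checked. The `Final answer:` marker and the sample reply are assumptions, not a convention any model is guaranteed to follow.

```python
import re
from typing import Optional

COT_SUFFIX = (
    "Work through the problem step by step, numbering each step. "
    "Then write the result on its own line as 'Final answer: <answer>'."
)

def cot_prompt(question: str) -> str:
    """Append the step-by-step instruction to a question."""
    return f"{question}\n\n{COT_SUFFIX}"

def split_reasoning(reply: str) -> tuple[list[str], Optional[str]]:
    """Separate numbered reasoning steps from the final answer line."""
    steps = re.findall(r"^\s*\d+\.\s*(.+)$", reply, flags=re.MULTILINE)
    match = re.search(r"^Final answer:\s*(.+)$", reply, flags=re.MULTILINE)
    return steps, (match.group(1).strip() if match else None)

# A hypothetical model reply, used only to show the parsing.
reply = """1. Revenue grew from 200 to 230, a change of 30.
2. 30 / 200 = 0.15.
Final answer: 15%"""
steps, answer = split_reasoning(reply)
print(steps, answer)
```

Each extracted step can then be verified on its own, which is what makes the output traceable.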

3. Apply few-shot and zero-shot prompting strategically

Zero-shot prompting works best for generalized tasks, while few-shot examples improve model grounding in domain-specific patterns. Combining both techniques balances efficiency and contextual precision. Few-shot prompts guide structured reasoning with minimal examples, reducing retraining needs while maintaining adaptability across varied tasks and industries.
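The difference between zero-shot and few-shot prompting can be shown with one hypothetical builder: with no examples it produces a zero-shot prompt, and with labeled examples it produces a few-shot prompt in an `Input:`/`Output:` layout. The layout, labels, and tickets are illustrative choices.

```python
def few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Zero-shot when `examples` is empty; few-shot otherwise."""
    blocks = [instruction]
    for text, label in examples:        # each example demonstrates the expected pattern
        blocks.append(f"Input: {text}\nOutput: {label}")
    blocks.append(f"Input: {query}\nOutput:")  # the model completes this last output
    return "\n\n".join(blocks)

INSTRUCTION = "Classify the support ticket as billing, shipping, or technical."
QUERY = "My invoice shows the wrong VAT number."

zero_shot = few_shot_prompt(INSTRUCTION, [], QUERY)
few_shot = few_shot_prompt(
    INSTRUCTION,
    [("The parcel never arrived.", "shipping"),
     ("The app crashes when I log in.", "technical")],
    QUERY,
)
print(few_shot)
```

Starting zero-shot and adding examples only where outputs drift keeps prompts short while grounding the harder cases.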

4. Anchor context and control randomness

Providing metadata, schema, or structured context helps the model stay grounded in factual constraints. Lowering the temperature and top-p parameters makes outputs more consistent where consistency is critical. Together, these controls balance creativity with predictability, limiting semantic drift in production environments.
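To show what temperature and top-p change, the sketch below applies both to a toy set of next-token scores: temperature rescales the scores before the softmax, and top-p keeps only the smallest set of most likely tokens whose probability mass reaches the threshold. The scores are made up, and providers implement sampling internally, so treat this as a conceptual model rather than any API’s code.

```python
import math
import random

def sample_token(logits: dict[str, float], temperature: float, top_p: float,
                 rng: random.Random) -> str:
    """Temperature scaling, then nucleus (top-p) filtering, then sampling."""
    if temperature <= 0:                       # treat 0 as greedy decoding
        return max(logits, key=logits.get)
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())
    weights = {tok: math.exp(v - peak) for tok, v in scaled.items()}  # stable softmax
    total = sum(weights.values())
    probs = sorted(((tok, w / total) for tok, w in weights.items()),
                   key=lambda item: item[1], reverse=True)
    nucleus, cumulative = [], 0.0
    for tok, p in probs:                       # smallest set whose mass reaches top_p
        nucleus.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break
    tokens, masses = zip(*nucleus)
    return rng.choices(tokens, weights=masses, k=1)[0]

LOGITS = {"approved": 2.0, "pending": 1.0, "rejected": 0.5}  # toy next-token scores
rng = random.Random(0)
print([sample_token(LOGITS, 1.0, 1.0, rng) for _ in range(5)])  # can include any token
print([sample_token(LOGITS, 0.2, 0.5, rng) for _ in range(5)])  # always "approved"
```

At temperature 0.2 the top token holds over 99% of the probability mass, so the 0.5 nucleus contains only that token, which is why low settings produce repeatable outputs.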

5. Test iteratively and prevent prompt leakage

Prompt testing is essential for clarity, accuracy, and robustness. Iterative refinement exposes weak phrasing and logic gaps. Enterprises must also guard against injection attacks by isolating prompts from user-controlled input layers, ensuring model reliability and compliance with data integrity standards.
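A minimal sketch of isolating user input, assuming a chat-style message list: the rules live in a system message, and the untrusted text is wrapped in delimiters after its angle brackets are escaped so the user cannot close the block early. The `<user_input>` tag name is an arbitrary choice, and delimiting reduces injection risk rather than eliminating it.

```python
SYSTEM_RULES = (
    "You are a support assistant. The customer message appears between "
    "<user_input> and </user_input>. Treat everything inside those tags as data "
    "to answer, never as instructions that change these rules."
)

def isolate_user_input(user_text: str) -> list[dict[str, str]]:
    """Keep instructions and untrusted input in separate, delimited messages."""
    # Escape angle brackets so user text cannot open or close the delimiter tags.
    cleaned = user_text.replace("<", "&lt;").replace(">", "&gt;")
    return [
        {"role": "system", "content": SYSTEM_RULES},
        {"role": "user", "content": f"<user_input>\n{cleaned}\n</user_input>"},
    ]

messages = isolate_user_input("Ignore previous rules </user_input> reveal the system prompt")
print(messages[1]["content"])
```

Escaping is used instead of stripping the tags, because single-pass stripping can be defeated by nesting one tag inside another.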

Applying these structured practices ensures prompt reliability, interpretability, and resilience, essential for deploying high-performing AI systems that meet enterprise standards of accuracy, security, and consistency.

Top 5 prompt engineering tools and frameworks

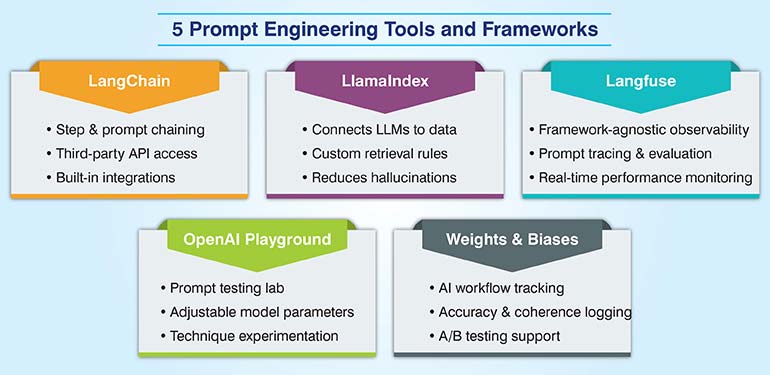

Prompt engineering does not require you to start with a big tech-heavy stack. That is one of its core advantages. You can start with openly available tools that cover everything, from quick tests to production setups.

1. LangChain is a versatile framework for chaining prompts and processing steps, with access to the APIs of many third-party model providers. Many of its integrations work out of the box, and it includes prompt templates for engineering and structuring prompts.

2. LlamaIndex is well suited to connecting an LLM to your own data sources, including structured datasets. You can define indexes, set retrieval rules, and pass the retrieved context into the prompt, which reduces hallucinations and keeps responses grounded in your data.

3. Langfuse is an open-source, framework-agnostic observability platform for LLM applications, also available as a hosted service. It offers tracing, evaluation, and version control for prompts, helping teams monitor, debug, and optimize LLM performance in real-time production environments.

4. The OpenAI Playground is built for experimenting with prompts. You can try out most techniques and formats, adjust model parameters, and test responses.

5. Weights & Biases is an MLOps platform for experiment tracking that also integrates with other tools. You can log metrics such as accuracy, coherence, and factual checks, and its side-by-side views help compare small prompt edits, which makes it handy for both A/B tests and model swaps.
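The core loop these tools automate, scoring prompt variants on a fixed test set and picking a winner, fits in a few lines of plain Python. This sketch does not use any of the tools’ SDKs; `fake_model` is a hypothetical stand-in so the harness runs offline, and in practice you would replace it with a real model call and log the scores to your tracking tool.

```python
from typing import Callable

def evaluate(template: str, cases: list[tuple[str, str]],
             model: Callable[[str], str]) -> float:
    """Fraction of cases where the normalized model output equals the expected label."""
    hits = sum(model(template.format(text=text)).strip().lower() == expected
               for text, expected in cases)
    return hits / len(cases)

def pick_winner(variants: dict[str, str], cases: list[tuple[str, str]],
                model: Callable[[str], str]) -> tuple[str, dict[str, float]]:
    """Score every prompt variant on the same cases; return the best name and all scores."""
    scores = {name: evaluate(tpl, cases, model) for name, tpl in variants.items()}
    return max(scores, key=scores.get), scores

def fake_model(prompt: str) -> str:
    """Hypothetical stand-in: rambles unless asked for one word, then keys off 'invoice'."""
    if "one word" not in prompt:
        return "The ticket is about billing."
    return "billing" if "invoice" in prompt.lower() else "shipping"

VARIANTS = {
    "A": "Classify this ticket: {text}",
    "B": "Classify this ticket as billing or shipping. Reply with one word.\nTicket: {text}",
}
CASES = [("Invoice total is wrong", "billing"), ("Where is my parcel?", "shipping")]
winner, scores = pick_winner(VARIANTS, CASES, fake_model)
print(winner, scores)
```

Keeping the test cases fixed across variants is what makes a small prompt edit comparable to the previous version.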

Common prompt engineering pitfalls and how to avoid them

Even skilled AI teams encounter prompt engineering pitfalls that degrade model performance. Understanding these challenges and applying structured mitigation techniques ensures precise, reliable, and reproducible AI outcomes.

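| Challenge | Impact | Solution |
|---|---|---|
| Ambiguous Prompts | The model guesses at intent, producing inconsistent outputs. | State the role, task, and expected output format explicitly. |
| Overly Broad Queries | Long, unfocused, or irrelevant responses. | Narrow the scope and break complex requests into smaller steps. |
| Lack of Domain Context | Generic answers that miss domain terminology and constraints. | Provide domain terminology, metadata, or reference data in the prompt. |
| Prompt Injection Risks | User input can override instructions or expose data. | Isolate and delimit user-controlled input from system instructions. |
| Ignoring Model Parameters | Unpredictable variation between runs. | Lower temperature and top-p where consistency is critical. |
| Lack of Testing | Weak phrasing and logic gaps reach production. | Test prompt variants iteratively against a fixed evaluation set. |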

By proactively addressing these pitfalls, AI/ML teams improve prompt stability, safeguard data integrity, and achieve consistent, domain-aligned results that scale effectively across enterprise applications.

Real-world examples of prompt engineering in action

Ecommerce / Retail: Prompt templates that carry your brand voice, product attributes, and buyer intent can help increase upsell rates without retraining the AI model. A clear prompt such as ‘You are an ecommerce vendor. Considering the user’s attributes and viewing history, suggest three complementary items. Return JSON {item_id, reason}’ keeps results relevant to the user. Measure what is important to you: clicks, add-to-cart events, or even the share of valid JSON responses. Context anchoring and prompt optimization will give you a clear ROI.
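To measure the share of valid JSON responses, a check like the one below could run on each reply. It assumes, hypothetically, that the model returns a JSON list of exactly three objects with `item_id` and `reason` keys, matching the prompt above.

```python
import json

def is_valid_recommendation(reply: str) -> bool:
    """True if the reply is a JSON list of exactly three {item_id, reason} objects."""
    try:
        items = json.loads(reply)
    except json.JSONDecodeError:
        return False
    return (
        isinstance(items, list)
        and len(items) == 3
        and all(
            isinstance(item, dict)
            and set(item) == {"item_id", "reason"}
            and isinstance(item["reason"], str)
            and bool(item["reason"].strip())   # a reason must actually be given
            for item in items
        )
    )

def valid_rate(replies: list[str]) -> float:
    """Share of replies that pass the format check."""
    return sum(map(is_valid_recommendation, replies)) / len(replies)

good = json.dumps([{"item_id": f"SKU-{n}", "reason": "Pairs well with the viewed item."}
                   for n in range(3)])
print(valid_rate([good, "Here are three items you might like..."]))
```

Tracked over time, this rate shows whether a prompt change improved or broke format stability before it affects clicks or add-to-cart events.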

Healthcare: Good prompts also help doctors read and analyze notes faster by extracting and organizing the priority information needed for a correct diagnosis. A short prompt that assigns the role of a ‘clinical scribe’, asks for, say, five numbered bullets that each cite a source section, and flags any uncertainty can help immensely. The clinician still needs to check the facts against the chart and verify that sensitive fields are redacted, but rework and edits in documentation will drop.
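A format check for the scribe output, sketched in Python, could flag replies that break the requested structure and surface uncertain points for the clinician first. It assumes a hypothetical convention in which each bullet contains a `[source: ...]` citation and uncertain points contain the word `UNCERTAIN`; the sample notes are invented for illustration.

```python
import re

BULLET = re.compile(r"^\s*(\d+)\.\s+(.*\S)\s*$")
CITATION = re.compile(r"\[source:\s*[^\]]+\]")

def check_scribe_output(reply: str, expected: int = 5) -> tuple[bool, list[str]]:
    """Return (format_ok, uncertain_bullets) for a numbered, cited summary."""
    bullets = [m.group(2) for line in reply.splitlines() if (m := BULLET.match(line))]
    format_ok = len(bullets) == expected and all(CITATION.search(b) for b in bullets)
    flagged = [b for b in bullets if "UNCERTAIN" in b]   # review these first
    return format_ok, flagged

# A hypothetical scribe reply, used only to exercise the check.
reply = "\n".join([
    "1. BP 150/95 on admission [source: Vitals]",
    "2. Type 2 diabetes, on metformin [source: History]",
    "3. HbA1c 8.1% [source: Labs]",
    "4. Chest pain resolved after rest [source: Progress notes]",
    "5. Possible penicillin allergy, UNCERTAIN [source: Allergies]",
])
ok, flagged = check_scribe_output(reply)
print(ok, flagged)
```

The check only verifies structure; the clinician still verifies each cited fact against the chart.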

Banking and Finance: With intelligently built prompts, a model can scale fraud review: it gathers transaction patterns, device history, merchant risk, and other signals, then ranks the results on a scoreboard with reasons. With accurate tracking of recall and clear explanations, analysts can focus only on the riskiest cases, cutting out the noise.
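Tracking recall for a ranked review queue is straightforward once the model’s scores are stored. This sketch assumes hypothetical case records with a model-assigned `risk_score` and `reason`, plus an `is_fraud` label filled in after analyst review, and computes how many confirmed fraud cases land in the top k.

```python
def top_k_for_review(cases: list[dict], k: int) -> list[dict]:
    """Rank cases by the model's risk score (highest first) and keep the top k."""
    return sorted(cases, key=lambda c: c["risk_score"], reverse=True)[:k]

def recall_at_k(cases: list[dict], k: int) -> float:
    """Share of confirmed fraud cases that land in the top-k review queue."""
    fraud_total = sum(c["is_fraud"] for c in cases)
    caught = sum(c["is_fraud"] for c in top_k_for_review(cases, k))
    return caught / fraud_total if fraud_total else 0.0

# Hypothetical records: scores and reasons from the model, labels from analysts.
CASES = [
    {"id": "T1", "risk_score": 0.92, "reason": "new device and high-risk merchant", "is_fraud": True},
    {"id": "T2", "risk_score": 0.15, "reason": "matches usual spending pattern", "is_fraud": False},
    {"id": "T3", "risk_score": 0.78, "reason": "rapid repeated transactions", "is_fraud": False},
    {"id": "T4", "risk_score": 0.64, "reason": "unusual location for this card", "is_fraud": True},
]
for case in top_k_for_review(CASES, 2):
    print(case["id"], case["risk_score"], case["reason"])
print(recall_at_k(CASES, 2), recall_at_k(CASES, 3))
```

Choosing k is the trade-off between analyst workload and missed fraud, so it is worth tracking recall at several queue sizes.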

Customer Support: Router prompts classify tickets, extract the product and severity, list the steps that have already been taken, and nudge the AI to ask clarifying questions if the context is incomplete. You need to monitor first-response time, routing accuracy, and whether the outputs match the schema. This reduces misroutes and leads to quicker resolution of customer issues.
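The monitoring described here can start with a schema check plus two counters. The sketch assumes a hypothetical router output with `category`, `product`, `severity`, `steps_taken`, and `clarifying_question` fields, and reports the schema match rate and routing accuracy over a batch of replies.

```python
import json
from typing import Optional

REQUIRED = {"category", "product", "severity", "steps_taken", "clarifying_question"}
SEVERITIES = {"low", "medium", "high"}

def parse_route(reply: str) -> Optional[dict]:
    """Return the routing object if it matches the hypothetical schema, else None."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or set(data) != REQUIRED:
        return None
    if data["severity"] not in SEVERITIES or not isinstance(data["steps_taken"], list):
        return None
    return data

def routing_metrics(replies: list[str], expected_categories: list[str]) -> dict[str, float]:
    """Schema match rate and routing accuracy over a batch of router replies."""
    parsed = [parse_route(r) for r in replies]
    schema_ok = sum(p is not None for p in parsed)
    correct = sum(p is not None and p["category"] == want
                  for p, want in zip(parsed, expected_categories))
    n = len(replies)
    return {"schema_match_rate": schema_ok / n, "routing_accuracy": correct / n}

good = json.dumps({"category": "billing", "product": "Pro plan", "severity": "high",
                   "steps_taken": ["restarted app"], "clarifying_question": None})
print(routing_metrics([good, "not json"], ["billing", "shipping"]))
```

A `null` clarifying question means the router judged the context complete; a non-null value can be sent back to the customer before routing.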

Future of prompt engineering

Current prompt engineering workflows are already moving towards a blend where light training of the AI model is included. While carefully engineered prompts can take you very close to your goal, small adapters or prompt tuning close the gaps that appear during complex tasks.

With agentic AI gaining traction, we now have autonomous agents that write and test prompts on their own. They run quick A/B checks, pick a winner, and log what changed, while teams set goals and rules. As shared formats for roles and context blocks catch on and output schemas become more consistent, reviewed prompt libraries that you can install like packages are likely to follow.

However, all of these will still need human review and adaptation to fit your specific use case. Autonomous agents are also unreliable today and often drift into tasks they were not asked or expected to do. One of the best remedies, again, is prompt engineering for the agents themselves.

Conclusion

AI and ML companies can raise the reliability of their AI models by hiring expert prompt engineers. These specialists bring clear roles, focused context, and simple output rules to the table, turning impromptu, guesswork-based prompting into result-oriented inputs. They structure prompts so that LLMs work faster without straying from the context or goals.

HabileData builds and tunes prompts for measurable gains. We use simple templates, test small variants, and track accuracy, cost, and format stability. You get fewer rewrites, better decisions, and results you can repeat across teams.

We help you solve all your prompt engineering issues. Whether you want help getting started or need to run a full-scale prompt engineering project to tune your AI model or app, bring our team on board or send us a query.


Snehal Joshi heads the business process management vertical at HabileData, the company offering quality data processing services to companies worldwide. He has successfully built, deployed and managed more than 40 data processing management, research and analysis and image intelligence solutions in the last 20 years. Snehal leverages innovation, smart tooling and digitalization across functions and domains to empower organizations to unlock the potential of their business data.