Human-in-the-Loop (HITL) enhances AI-driven data annotation by combining human expertise with automated labeling to ensure accuracy and quality. This collaborative approach addresses AI limitations, streamlines the refinement of training data, and accelerates model development, ultimately boosting performance and enabling more reliable real-world applications.

Contents

- Understanding human-in-the-loop in annotation projects

- How HITL boosts the performance of AI-driven data annotation

- 10-step human-in-the-loop implementation for data annotation

- 7 best practices for HITL annotation

- Real-world applications of the HITL process

- Future of HITL in AI-driven data annotation

- Conclusion

As AI technologies revolutionize industries across the board, the insatiable demand of AI algorithms for high-quality labeled data at scale has to be met. Because manual data annotation is impractical for large datasets, it has given way to AI-driven data annotation, which accelerates the overall annotation workflow.

But AI isn’t 100% accurate; it makes mistakes. The causes range from hardware capacity and token limits to a lack of fine-tuning or poor annotation quality in the model’s training data.

Integrating human expertise in the AI workflow through the Human-in-the-Loop approach strengthens the potential of AI algorithms. Human judgment enables AI systems to navigate complex data and make more informed decisions. It applies contextual knowledge, cultural understanding, and nuanced judgment, helping to mitigate the risks associated with biased or incomplete datasets. Consequently, HITL enhances the accuracy and reliability of AI algorithms.

In this article, we explore the hurdles to achieving quality in AI-driven data annotation and how bringing human intelligence into the loop improves the performance of AI applications.

Understanding human-in-the-loop in annotation projects

Incorporating human expertise in the AI workflow through human-in-the-loop enables AI systems to navigate complex data and make informed decisions.

Human-in-the-Loop (HITL) is a collaborative approach that combines human intelligence with machine algorithms, enabling continuous learning, validation, and improvement of AI systems. In this process, human annotators are part of the machine learning workflow, working alongside automated processes to achieve more accurate and reliable results.

By leveraging human expertise, intuition, and contextual understanding, the HITL model helps overcome the limitations of AI algorithms, especially in scenarios involving complex or ambiguous data. It works as a collective effort between ML algorithms and human intelligence.

How HITL boosts the performance of AI-driven data annotation

Human-in-the-Loop (HITL) creates an iterative feedback loop to refine algorithms, reduce errors, and enhance overall model accuracy. This leads to more reliable and effective AI systems. Here are some of the ways HITL benefits AI projects.

1. Improved accuracy and quality of annotations

Human experts are often better than machines at interpreting ambiguous and complex data. Automated systems make mistakes, especially when dealing with complex or noisy data, and HITL allows human annotators to spot and correct those errors. This raises annotation accuracy and minimizes the risk of incorrect labels.

Machine learning models also can’t work without sufficient labeled data, and here too the HITL approach helps. Suppose a project requires working with a language spoken by a limited number of people. ML algorithms may not find enough examples to learn from, so human annotators supply and verify accurate labels for this rare data.
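One common way to focus human effort on likely machine errors is to route pre-labels by confidence: confident predictions are accepted, uncertain ones are queued for human review. The sketch below assumes the model reports a confidence score for each label; the `Prediction` type, the `route_predictions` function, and the 0.9 threshold are hypothetical choices for illustration, not part of any specific tool.

```python
from dataclasses import dataclass

@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's probability for its predicted label, in [0, 1]

def route_predictions(predictions, threshold=0.9):
    """Split machine pre-labels into auto-accepted labels and a human review queue.

    Predictions at or above `threshold` are accepted as-is; the rest go to
    human annotators, who confirm or correct the label.
    """
    accepted, review_queue = [], []
    for p in predictions:
        if p.confidence >= threshold:
            accepted.append(p)
        else:
            review_queue.append(p)
    return accepted, review_queue

preds = [
    Prediction("img-001", "cat", 0.97),
    Prediction("img-002", "dog", 0.55),
    Prediction("img-003", "cat", 0.91),
]
accepted, review = route_predictions(preds, threshold=0.9)
print([p.item_id for p in accepted])  # ['img-001', 'img-003']
print([p.item_id for p in review])    # ['img-002']
```

The threshold is a trade-off: raising it sends more items to humans and catches more errors, at a higher labeling cost. Teams often also spot-check a sample of auto-accepted labels, because a confident model can still be wrong.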

2. Better tuned for subjectivity and contextual understanding

Without human involvement, the annotations may lack nuances, contextual understanding, and subjective interpretations. Contextual understanding is essential in data annotation because it allows for accurate and meaningful labeling of data. It helps annotators interpret and comprehend the data within the appropriate context, resulting in more precise annotations. Human annotators can leverage their contextual understanding and domain expertise to resolve any ambiguity, enhancing the quality and accuracy of annotations.

Human annotators with expertise in cultural and social norms can handle subjective judgments effectively. They can evaluate content based on context, intent, and potential harm. For example, in sentiment analysis, the sentiment expressed in a sentence can vary with its context, and understanding that broader context helps assign the correct sentiment label. More generally, in natural language processing tasks, annotators draw on sentence structure, semantics, and the broader context to label the intended meaning of a word with multiple interpretations.

3. Edge case resolution

Some data annotation tasks involve challenging edge cases that require human judgment, intuition, or reasoning. These cases may be difficult to handle accurately without human involvement, potentially affecting the reliability and performance of models trained on the annotated data. Humans can identify and annotate these edge cases correctly, preventing the model from making erroneous predictions or generalizing poorly. Their involvement ensures that rare or challenging instances are accurately labeled or classified. It allows the machine learning model to handle such cases more effectively and make informed predictions.

Suppose an image contains a blurry object that could be either a dog or a cat. An annotator can examine the object’s shape, its color, any visible distinguishing features, and the surrounding context to resolve the ambiguity.

4. Continuous improvement

Without human input, it can be difficult to adapt to changes, update annotation guidelines, or handle novel situations as they arise. HITL facilitates an iterative feedback loop between human annotators and automated systems. Human annotators can provide insights, guidance, and feedback to improve the performance of automated systems. This collaborative process leads to ongoing improvements in accuracy and quality over time.

5. Active learning and querying

HITL systems can leverage active learning techniques to optimize the annotation process. Instead of randomly selecting instances for annotation, the model can query humans for annotations on uncertain or challenging examples. By focusing human effort on the most informative instances, annotation accuracy is improved while reducing annotation effort.
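Two common ways to score "uncertain" examples are least-confidence sampling (one minus the top class probability) and entropy sampling (the spread of the whole predicted distribution). The minimal sketch below assumes the model outputs a probability for each class; `select_for_annotation` and the example pool are hypothetical.

```python
import math

def least_confidence(probs):
    """1 - max class probability: higher means the model is less sure."""
    return 1.0 - max(probs)

def entropy(probs):
    """Shannon entropy (in nats) of the predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_for_annotation(pool, k, score=least_confidence):
    """Return the ids of the k most uncertain items in an unlabeled pool.

    `pool` maps item id -> list of predicted class probabilities.
    """
    ranked = sorted(pool, key=lambda item_id: score(pool[item_id]), reverse=True)
    return ranked[:k]

pool = {
    "doc-1": [0.98, 0.01, 0.01],  # confident
    "doc-2": [0.40, 0.35, 0.25],  # very uncertain
    "doc-3": [0.60, 0.30, 0.10],  # somewhat uncertain
}
print(select_for_annotation(pool, k=2))                 # ['doc-2', 'doc-3']
print(select_for_annotation(pool, k=1, score=entropy))  # ['doc-2']
```

Only the selected items are sent to human annotators; the rest of the pool waits for the next round, after the model has been retrained on the new labels.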

6. Quality control

HITL lets teams enforce specific quality control measures and annotation guidelines. Human reviewers can check and validate annotations to ensure they meet the desired quality standards. This reduces errors, inconsistencies, and false positives or negatives caused by incorrect or inadequate annotations.

There are multiple ways in which quality can be checked for data annotation using HITL.

For instance, when multiple annotators label the same data instance, differences in their annotations can arise. This can be resolved by involving a third-party annotator or an expert to make a final decision. This helps ensure consistency and resolve any conflicts in the annotations.

For challenging or subjective tasks, employing consensus-building techniques can enhance the reliability of annotations. This can involve having multiple annotators label the same data and reaching a consensus through discussions or voting mechanisms. Consensus-based annotations reduce the impact of individual biases and increase the accuracy of labeling.

Project managers or supervisors can monitor the annotation process to ensure adherence to guidelines, answer questions, provide support, and address issues. Regular communication and supervision help maintain the quality of annotations throughout the project duration.
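The disagreement-resolution and voting approaches described above can be combined into a single rule. This minimal sketch, assuming categorical labels, accepts a label only when it wins a strict majority and otherwise escalates the item to an expert; `resolve_label` and the 0.5 agreement threshold are illustrative assumptions.

```python
from collections import Counter

def resolve_label(labels, min_agreement=0.5):
    """Return (label, needs_expert) for one item labeled by several annotators.

    The top label is accepted only if its share of the votes exceeds
    `min_agreement` (a strict majority by default); ties and three-way
    splits are escalated to an expert, signaled by (None, True).
    """
    if not labels:
        raise ValueError("at least one annotation is required")
    label, votes = Counter(labels).most_common(1)[0]
    if votes / len(labels) > min_agreement:
        return label, False
    return None, True

items = {
    "tweet-1": ["positive", "positive", "negative"],
    "tweet-2": ["positive", "negative", "neutral"],
}
for item_id, labels in items.items():
    print(item_id, resolve_label(labels))
# tweet-1 ('positive', False)
# tweet-2 (None, True)
```

Raising `min_agreement` (for example, to require unanimity on sensitive content) sends more items to experts in exchange for more reliable labels.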

10-step human-in-the-loop implementation for data annotation

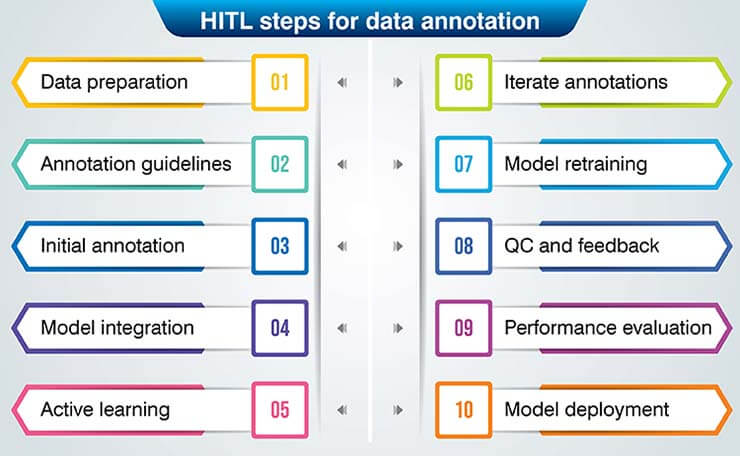

By combining HITL with AI-driven annotation, we trained an AI model to identify different human faces accurately. Here are the 10 steps we followed to achieve better accuracy:

Data Preparation: We started the project by gathering raw data, collecting thousands of human face images from various sources. We planned our data collection and set up monitoring systems to ensure a diverse and representative set of images, which produced more comprehensive training data and mitigated the risk of bias.
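As a hedged illustration of one kind of representation check (not the monitoring setup used in this project), a monitor could count images per metadata attribute and flag values that fall below a minimum share of the dataset. The metadata fields (`source`, `lighting`), the 10% threshold, and the `underrepresented` function are hypothetical.

```python
from collections import Counter

def underrepresented(metadata, attribute, min_share):
    """Return {value: share} for attribute values below `min_share` of the dataset."""
    counts = Counter(record[attribute] for record in metadata)
    total = sum(counts.values())
    return {value: count / total for value, count in counts.items() if count / total < min_share}

# Hypothetical per-image metadata (repeated dicts are fine: they are only read).
metadata = (
    [{"source": "studio", "lighting": "bright"}] * 70
    + [{"source": "street", "lighting": "dim"}] * 25
    + [{"source": "webcam", "lighting": "dim"}] * 5
)
print(underrepresented(metadata, "source", min_share=0.10))    # {'webcam': 0.05}
print(underrepresented(metadata, "lighting", min_share=0.10))  # {}
```

A flagged value tells the team where to collect more images before annotation starts; which attributes to monitor is a project-specific decision.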

Annotation Guidelines: Next, we crafted clear and detailed annotation guidelines. These instructions guided our human annotators on how to label different facial features, such as eyes, nose, mouth, and the outline of the face.
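Guidelines are easier to enforce when parts of them are also encoded as automatic checks on every submitted annotation. The sketch below assumes each facial feature is labeled as a list of (x, y) pixel points; the label names, the three-point minimum for a face outline, and the `validate` function are hypothetical, not this project's actual schema.

```python
from dataclasses import dataclass

# Hypothetical label set based on the features named in the guidelines.
ALLOWED_LABELS = {"left_eye", "right_eye", "nose", "mouth", "face_outline"}
MIN_POINTS = {"face_outline": 3}  # an outline is a polygon; other features need >= 1 point

@dataclass
class Annotation:
    label: str
    points: list[tuple[float, float]]  # (x, y) pixel coordinates

def validate(annotation, width, height):
    """Return a list of guideline violations (empty if the annotation passes)."""
    errors = []
    if annotation.label not in ALLOWED_LABELS:
        errors.append(f"unknown label: {annotation.label!r}")
    needed = MIN_POINTS.get(annotation.label, 1)
    if len(annotation.points) < needed:
        errors.append(f"{annotation.label} needs at least {needed} point(s)")
    for x, y in annotation.points:
        if not (0 <= x < width and 0 <= y < height):
            errors.append(f"point ({x}, {y}) is outside the {width}x{height} image")
    return errors

print(validate(Annotation("nose", [(120, 140)]), 256, 256))  # []
print(validate(Annotation("ear", [(300, 10)]), 256, 256))    # two violations: unknown label, point out of bounds
```

Mechanical checks like these catch formatting slips early, leaving human reviewers free to focus on judgment calls the rules cannot capture.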

Initial Annotation: We then manually annotated a subset of the images according to our guidelines. This initial annotation served as the training data for our machine-learning model.

Machine Learning Model Integration: With our initial annotations in hand, we trained a machine learning model. The model learned to recognize facial features based on our human annotations.

Active Learning: We then implemented active learning techniques to select the most informative and uncertain examples from our unlabeled data. These were the images that the model was least confident about, and where it could learn the most from additional human annotation.

Human Annotation Iteration: We assigned the selected images to our human annotators, who diligently reviewed and annotated them based on the guidelines. Their new labels fed the iterative process of human annotation and machine learning.

Model Retraining: We took the updated labeled dataset, including the new annotations, and retrained the machine learning model. This iterative process of annotation and retraining helped the model to learn from its mistakes and improve over time.
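The train, query, annotate, and retrain cycle in the steps above can be sketched as a small loop. This is a deliberately toy illustration under stated assumptions: a one-dimensional nearest-centroid "model" and a simulated annotator function stand in for the real face-recognition model and the human annotators. `train`, `predict_proba`, `hitl_loop`, `simulated_annotator`, and their parameters are all hypothetical.

```python
import math

def train(labeled):
    """Fit a toy nearest-centroid model: the mean feature value per class."""
    sums, counts = {}, {}
    for x, y in labeled:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}

def predict_proba(model, x):
    """Turn distances to each class centroid into probabilities via a softmax."""
    scores = {y: -abs(x - c) for y, c in model.items()}
    top = max(scores.values())
    exps = {y: math.exp(s - top) for y, s in scores.items()}
    total = sum(exps.values())
    return {y: e / total for y, e in exps.items()}

def hitl_loop(seed_labeled, unlabeled, annotate, rounds=3, batch_size=2):
    """Repeat: query the least-confident items, have them labeled, retrain."""
    labeled = list(seed_labeled)  # initial human annotations
    pool = list(unlabeled)
    model = train(labeled)        # initial model
    for _ in range(rounds):
        if not pool:
            break
        # Active learning: least-confident items first.
        pool.sort(key=lambda x: max(predict_proba(model, x).values()))
        batch, pool = pool[:batch_size], pool[batch_size:]
        # Human annotation iteration (simulated here by `annotate`).
        labeled += [(x, annotate(x)) for x in batch]
        # Model retraining on the enlarged labeled set.
        model = train(labeled)
    return model, labeled

def simulated_annotator(x):
    """Hypothetical stand-in for a human annotator: values >= 5 are class "B"."""
    return "B" if x >= 5.0 else "A"

model, labeled = hitl_loop(
    seed_labeled=[(1.0, "A"), (9.0, "B")],
    unlabeled=[2.0, 4.5, 5.5, 8.0, 3.0, 7.0],
    annotate=simulated_annotator,
)
print(model)         # {'A': 2.625, 'B': 7.375}
print(len(labeled))  # 8
```

The first round queries 4.5 and 5.5, the points closest to the decision boundary, which is exactly where human labels are most informative.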

Quality Control and Feedback: We regularly checked for annotation consistency and accuracy. Constructive feedback helped our human annotators improve their work and correctly follow the annotation guidelines.
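Consistency checks need a metric. One standard option for two annotators labeling the same items, used here as an illustrative assumption rather than the metric from this project, is Cohen's kappa, which corrects raw agreement p_o for the agreement p_e expected by chance: kappa = (p_o - p_e) / (1 - p_e). The labels below are hypothetical.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Agreement between two annotators on the same items, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("expected two non-empty label lists of equal length")
    n = len(labels_a)
    # p_o: fraction of items where the two annotators agree.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # p_e: agreement expected by chance from each annotator's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both used one identical label everywhere; treated as perfect agreement
    return (p_o - p_e) / (1 - p_e)

annotator_1 = ["eye", "eye", "nose", "mouth", "nose", "eye"]
annotator_2 = ["eye", "nose", "nose", "mouth", "nose", "eye"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.739
```

Values near 1 indicate strong agreement; values near 0 mean the annotators agree no more than chance would predict, which may point to unclear guidelines.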

Performance Evaluation: We tested the machine learning model’s performance using a separate validation dataset. This allowed us to assess how well the model was likely to perform on new, unseen data.
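Because new images keep arriving in later rounds, the validation set has to stay separate from training data over time. One common approach, shown here as an assumption rather than this project's method, is a hash-based split: each image id is hashed, and the hash alone decides its side. `in_validation_set` and the 20% fraction are hypothetical.

```python
import hashlib

def in_validation_set(item_id, validation_fraction=0.2):
    """Assign an item to validation based on a stable hash of its id.

    Unlike reshuffling, the assignment never changes as new images arrive, so
    an image used for validation never leaks into a later training round.
    """
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") / 2**32  # roughly uniform in [0, 1)
    return bucket < validation_fraction

ids = [f"face-{n:04d}" for n in range(1000)]
val_ids = [i for i in ids if in_validation_set(i)]
train_ids = [i for i in ids if not in_validation_set(i)]
print(len(val_ids), len(train_ids))  # the validation share should be close to 20%
```

A random shuffle would give a similar split once, but re-running it after each data collection round could move validation images into training and inflate the measured performance.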

Deployment and Production: Once the model met all benchmarks during evaluation, we deployed it in real-world applications to identify human faces. We continued to monitor its real-world performance and collected more data for further annotation and retraining as needed.

Human-in-the-loop was extremely important at every step of the data annotation project. The iterative process of human annotation, machine learning, and active learning ensured that the final AI model and computer vision system were accurate, reliable, and capable of improving over time.

7 best practices for HITL annotation

- Clear Annotation Guidelines: Well-defined annotation guidelines are essential to ensure consistency and accuracy.

- Annotator Training and Communication: Invest in training annotators to ensure they understand the task requirements, guidelines, and any domain-specific knowledge.

- Quality Control Mechanisms: Implement robust quality control measures to monitor and maintain annotation quality.

- Iterative Feedback Loop: Establish a feedback loop between annotators and the machine learning model to improve the model’s performance over time.

- Bias Mitigation: Take measures to minimize annotation bias by designing guidelines that focus on objective criteria, conducting bias audits, and providing clear instructions on potential biases.

- Task Decomposition and Automation: Break complex annotation tasks into smaller subtasks to distribute the workload among annotators effectively, and automate repetitive subtasks where possible.

- Regular Evaluation and Feedback: Continuously evaluate the annotation quality and model performance.
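As a sketch of the task-decomposition practice above, assuming a job is split into fixed-size batches of images (one of several possible ways to decompose work), the batches can be handed out to annotators in turn. `decompose`, `assign_round_robin`, and the annotator names are hypothetical.

```python
from itertools import cycle

def decompose(items, batch_size):
    """Split a large annotation job into fixed-size batches."""
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]

def assign_round_robin(batches, annotators):
    """Hand out batches to annotators in turn so workloads stay balanced."""
    assignments = {name: [] for name in annotators}
    for batch, name in zip(batches, cycle(annotators)):
        assignments[name].append(batch)
    return assignments

images = [f"img-{i:03d}" for i in range(10)]
batches = decompose(images, batch_size=3)  # 4 batches: 3 + 3 + 3 + 1 images
plan = assign_round_robin(batches, ["ana", "ben", "chen"])
print({name: sum(len(b) for b in bs) for name, bs in plan.items()})
# {'ana': 4, 'ben': 3, 'chen': 3}
```

Small batches also make quality control easier: a reviewer can audit or reassign a single batch without holding up the rest of the job.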

Real-world applications of the HITL process

The HITL process finds applications in various real-world scenarios where human expertise and automated systems collaborate to improve outcomes. Here are some examples of how HITL is applied in different domains:

Healthcare

By combining human expertise with machine automation, HITL helps ensure the accuracy, quality, and safety of healthcare processes, ranging from medical imaging to EHR analysis and clinical trials.

- Medical image annotation: In medical imaging, HITL is used for tasks such as image annotation, segmentation, and lesion detection.

- Electronic health record (EHR) analysis: HITL is applied in the analysis of electronic health records to extract relevant information and annotate patient data.

- Clinical trials and adverse event reporting: HITL is used in the annotation and review of adverse events and patient outcomes in clinical trials.

- Histopathology annotation: HITL is applied in histopathology, where human annotators collaborate with automated systems to annotate and analyze tissue samples.

- Radiology report generation: HITL is used to improve the accuracy and quality of radiology reports generated by automated systems.

Natural Language Processing

HITL is extensively used in Natural Language Processing (NLP) to improve the accuracy and quality of language-related tasks.

- Sentiment analysis: In sentiment analysis, HITL is used to refine and validate the sentiment labels assigned to text data.

- Named entity recognition (NER): Human annotators review and correct the automated system’s predictions, ensuring accurate recognition and categorization of named entities. This improves the precision and recall of NER models.

- Text categorization and classification: HITL is used in text categorization tasks, such as topic classification or document classification.

- Machine translation: HITL plays a significant role in machine translation tasks. Human annotators review and post-edit the machine-translated text to ensure grammatical correctness, fluency, and accurate translation of the intended meaning.

- Text summarization: HITL is used in text summarization tasks to generate concise and informative summaries.
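To make the NER point about precision and recall concrete: once human reviewers have corrected the labels, model predictions can be scored against those human-verified spans. This minimal sketch uses strict, exact-match scoring, where an entity counts only if its start, end, and type all match; partial-match schemes also exist. `span_prf` and the example spans are hypothetical.

```python
def span_prf(gold, predicted):
    """Exact-match precision, recall, and F1 over (start, end, type) entity spans."""
    gold, predicted = set(gold), set(predicted)
    tp = len(gold & predicted)  # predictions that exactly match a gold entity
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# "Ada Lovelace was born in London", character offsets with exclusive ends
human_gold = [(0, 12, "PER"), (25, 31, "LOC")]
model_pred = [(0, 3, "PER"), (25, 31, "LOC"), (13, 16, "ORG")]
print(tuple(round(v, 3) for v in span_prf(human_gold, model_pred)))  # (0.333, 0.5, 0.4)
```

Here the model truncated "Ada Lovelace" to "Ada" and tagged "was" as an organization; a reviewer's corrections turn both into training signal that can raise precision and recall in the next round.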

Social Media

HITL is extensively employed in social media applications to improve accuracy, moderation, and user experience.

- Content moderation: Social media platforms leverage HITL to enhance content moderation efforts.

- Hate speech and toxicity detection: HITL is used to tackle hate speech and toxic behavior on social media platforms.

- User profile verification: HITL is applied in user profile verification processes on social media platforms.

- Personalized recommendations: HITL is used to improve personalized content recommendations on social media platforms.

- Spam detection and filtering: HITL is employed to combat spam and unwanted content on social media platforms.

- Quality assessment and feedback: HITL is utilized to assess the quality of user-generated content and gather feedback.

E-commerce

HITL is widely utilized in the e-commerce industry to improve various aspects of the online shopping experience.

- Product categorization and catalog management: HITL is applied to categorize and organize product listings in e-commerce platforms.

- Image and video annotation: HITL is used to annotate and enrich product images and videos.

- Customer reviews and ratings: HITL is employed to moderate and validate customer reviews and ratings for products.

- Product recommendations: HITL is used to refine and validate product recommendation algorithms.

- Fraud detection and prevention: HITL is applied in fraud detection and prevention measures in e-commerce.

- Virtual shopping assistants: HITL is used to improve virtual shopping assistants or chatbots.

Future of HITL in AI-driven data annotation

While automated algorithms have made significant strides in data annotation, the complexity and nuances of certain tasks still require human intervention. Looking ahead, HITL is expected to become increasingly sophisticated, with advanced AI systems guiding human annotators, streamlining the annotation process, and ensuring higher levels of accuracy.

As AI technologies continue to advance, HITL will evolve to facilitate closer collaboration and adaptive learning. Automation and machine learning will play a significant role in streamlining the annotation process, making it more efficient and scalable. Additionally, integrating large language models and autonomous agents is expected to extend the capabilities of HITL, enabling more sophisticated and context-aware annotations. The likely result is higher accuracy, improved productivity, and the ability to handle complex datasets across diverse domains.

Conclusion

In the rapidly advancing field of AI-driven data annotation, Human-in-the-Loop (HITL) emerges as an essential strategy that significantly enhances the performance and reliability of automated annotation systems. HITL tackles the challenges posed by complex, ambiguous, or subjective annotation tasks through a collaborative approach.

The collaborative synergy between human annotators and intelligent algorithms leads to higher accuracy, improved efficiency, and increased scalability. Human annotators contribute their domain knowledge, contextual understanding, and critical thinking abilities, while AI algorithms provide automation, speed, and large-scale processing capabilities. The iterative feedback loop between human annotators and automated systems refines AI models, reducing errors and ensuring continuous improvement.

As AI continues to evolve, the collaboration between humans and machines through HITL will play a pivotal role in driving innovation and advancement in AI-driven data annotation.

Rope in our expert annotators to leverage human interaction to improve the quality of training data for your annotation projects.
